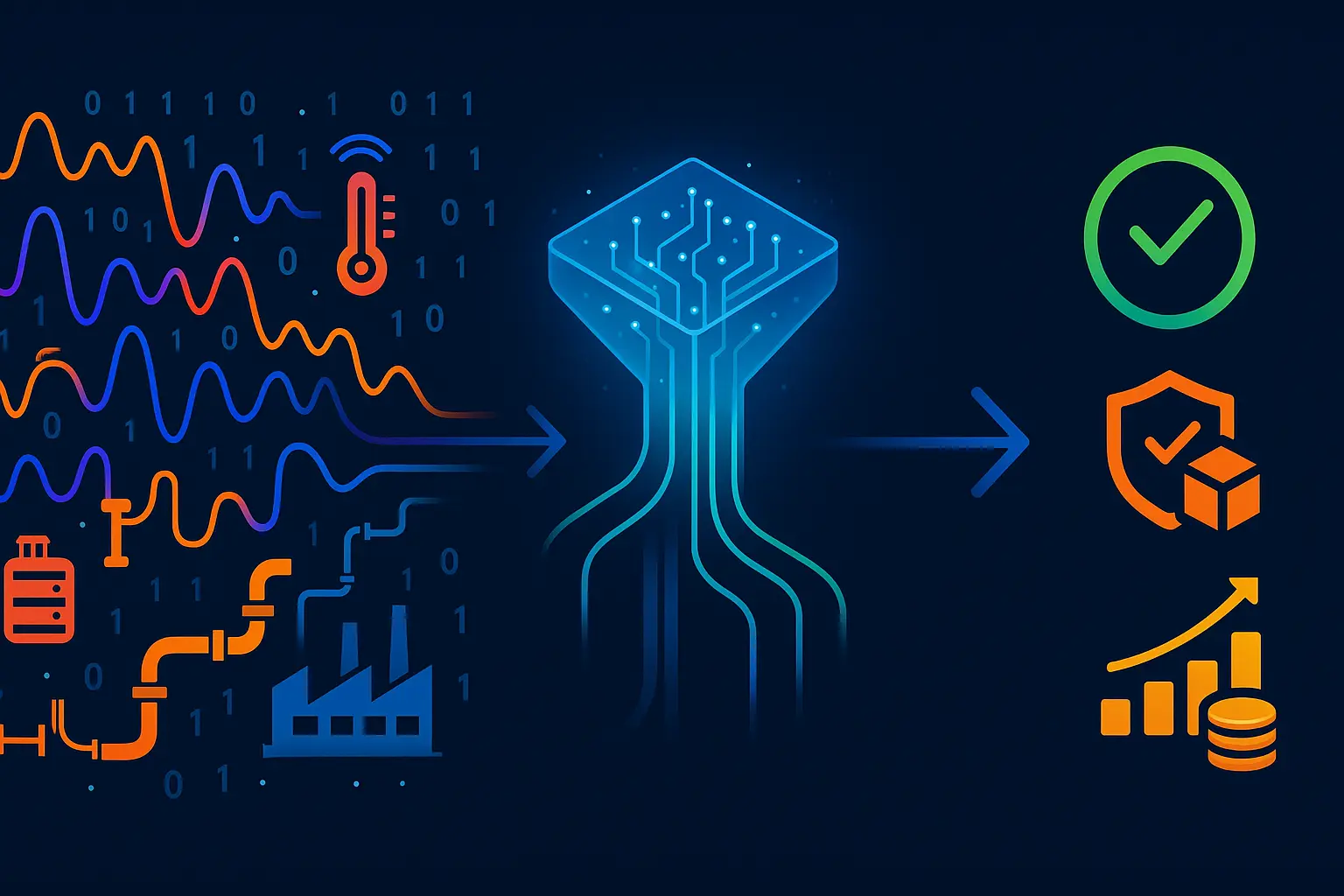

Imagine your operations team staring at hundreds of alerts every hour while a critical line in the plant stumbles, or your cloud bill spikes overnight and nobody knows which service caused it. That’s the reality of modern IT/OT environments: distributed systems, legacy controllers, edge sensors, and cloud services all produce mountains of logs, metrics, traces, events and change records — and most of it is noise. AIOps analytics is about turning that noisy stream into clear signals you can act on, so you get more uptime, better product quality, and measurable return on investment.

Put simply, AIOps analytics ingests diverse telemetry, correlates related events across IT and OT, and uses real‑time analytics plus historical machine learning to provide context. Instead of paging a person for every alert, it groups related alerts, points to the probable root cause, and — where safe — kicks off automated remediation or runbooks. That means fewer alert storms, faster mean time to repair, and fewer surprises during peak production.

Why now? Two reasons. First, hybrid and cloud-native architectures have grown so complex that traditional, manual operations don’t scale. Second, cost and sustainability pressures make downtime and waste unaffordable. The combination makes AIOps less of a “nice to have” and more of a practical necessity for teams that must keep equipment running, products within spec, and costs under control.

This article walks through what useful AIOps analytics actually does (not the hype), the capabilities that matter, how manufacturers capture value from predictive maintenance to energy optimization, and a practical reference architecture plus a 90‑day rollout plan you can follow. Read on if you want concrete ways to convert your noisy telemetry into predictable uptime, tighter quality control, and measurable ROI.

AIOps analytics, in plain language

What it does: ingest, correlate, and automate across logs, metrics, traces, and changes

AIOps platforms collect signals from everywhere your systems produce them: logs that record events, metrics that measure performance, traces that show request flows, and change records from deployments or configuration updates. They normalize and stitch these different signal types together so you can see a single incident as one story instead of dozens of disconnected alerts.

After ingesting data, AIOps correlates related happenings — for example, linking a spike in latency (metric) to a code deploy (change) and to error traces and logs from the same service. That correlation reduces noise, helps teams focus on the real problem, and drives automated responses: ticket creation, runbook execution, scaled rollbacks, or notifications to the right people with the right context.
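To make the correlation step concrete, here is a minimal Python sketch. It folds alerts for the same service into one incident while they keep arriving close together, then attaches that service's recent changes as suspects. The `Alert`, `Change` and `Incident` types, the 10-minute window and the 30-minute lookback are illustrative assumptions. Production platforms also use topology, text similarity and learned patterns.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

@dataclass
class Alert:
    service: str
    kind: str       # "metric", "log" or "trace"
    message: str
    at: datetime

@dataclass
class Change:
    service: str
    description: str
    at: datetime

@dataclass
class Incident:
    service: str
    alerts: list = field(default_factory=list)
    suspect_changes: list = field(default_factory=list)

def correlate(alerts, changes, window=timedelta(minutes=10), lookback=timedelta(minutes=30)):
    """Fold alerts for the same service into one incident while each new alert arrives
    within `window` of the previous one, then attach that service's changes made in
    the `lookback` period before the incident's first alert."""
    incidents, open_incident = [], {}
    for alert in sorted(alerts, key=lambda a: a.at):
        current = open_incident.get(alert.service)
        if current is not None and alert.at - current.alerts[-1].at <= window:
            current.alerts.append(alert)
        else:
            current = Incident(alert.service, [alert])
            open_incident[alert.service] = current
            incidents.append(current)
    for inc in incidents:
        start = inc.alerts[0].at
        inc.suspect_changes = [c for c in changes
                               if c.service == inc.service and start - lookback <= c.at <= start]
    return incidents

# Usage: a deploy followed by a latency spike, 500 errors and failing traces.
t0 = datetime(2024, 1, 1, 12, 0)
alerts = [
    Alert("checkout", "metric", "p95 latency above baseline", t0 + timedelta(minutes=1)),
    Alert("billing", "metric", "queue depth rising", t0 + timedelta(minutes=2)),
    Alert("checkout", "log", "HTTP 500 rate spike", t0 + timedelta(minutes=3)),
    Alert("checkout", "trace", "errors in payment call", t0 + timedelta(minutes=4)),
]
changes = [Change("checkout", "deploy v2.3.1", t0)]
incidents = correlate(alerts, changes)
```

Four raw alerts become two incidents, and the checkout incident arrives already linked to the deploy that preceded it.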

How it works: real-time analytics plus historical ML for context

Think of AIOps as two layers working together. The first is real-time analytics: streaming rules, thresholds, and pattern detectors that surface incidents the moment they start. The second is historical intelligence: models trained on past behaviour that provide context — normal operating baselines, seasonal patterns, and known failure modes.

When those layers combine, the platform can do useful things automatically. It spots anomalies that deviate from learned baselines, explains why an anomaly likely occurred by pointing to correlated events and topology, and recommends or runs safe remediation steps. Importantly, good AIOps keeps a human-in-the-loop where needed, shows an explanation for any automated action, and logs both decisions and results for audit and improvement.
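A minimal sketch of the two layers working together might look like the following. It assumes a fixed hard limit as the real-time rule and a mean/standard-deviation baseline as the "historical" layer. Real platforms learn much richer models, but the shape is similar: every verdict carries a human-readable explanation that can be logged for audit.

```python
import statistics

def detect(history, value, hard_limit, k=3.0):
    """Two-layer check for one new reading; returns (is_anomaly, explanation).

    Layer 1 (real time): a fixed hard limit that fires immediately.
    Layer 2 (historical): a baseline learned from past readings; fires when the value
    is more than k standard deviations away from the historical mean."""
    if value >= hard_limit:
        return True, f"{value} breached hard limit {hard_limit}"
    mean = statistics.fmean(history)
    std = statistics.stdev(history)   # needs at least two historical readings
    if std == 0:
        return value != mean, f"baseline is flat at {mean}"
    z = (value - mean) / std
    if abs(z) > k:
        return True, f"{value} is {z:.1f} std devs from baseline mean {mean:.1f}"
    return False, f"within learned baseline (z={z:.1f})"

# Usage: bearing temperature history (hypothetical values, degrees C).
history = [50, 52, 49, 51, 50, 48, 50, 51]
normal = detect(history, 51, hard_limit=90)
drift = detect(history, 60, hard_limit=90)
breach = detect(history, 95, hard_limit=90)
```

A reading of 60 never touches the hard limit, yet the baseline layer flags it, which is exactly the gap a threshold-only setup leaves open.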

Why now: cloud complexity, hybrid estates, and cost pressure make manual ops untenable

Modern infrastructure is far more fragmented and dynamic than it used to be. Teams manage cloud services, on-prem systems, containers that appear and disappear, and OT devices in manufacturing or industrial networks — all of which generate vast, heterogeneous telemetry. The volume and velocity of that data outstrip what humans can reasonably monitor and correlate by hand.

At the same time, organizations face tighter budgets and higher expectations for uptime and product quality. That combination forces a shift from reactive firefighting to proactive, data-driven operations: detecting issues earlier, diagnosing root cause faster, and automating safe fixes so people can focus on higher-value work. AIOps is the toolkit that makes that shift practical.

With that foundation understood, it becomes easier to evaluate which platform features actually move the needle in production — and which are just marketing. Next we’ll dig into the specific capabilities to watch for when you compare solutions and build a rollout plan.

Capabilities that separate useful AIOps analytics from hype

Noise reduction and alert correlation that ends alert storms

True value starts with reducing noise. A useful AIOps solution groups related alerts into a single incident, suppresses duplicates, and surfaces a prioritized, actionable view. The goal is fewer interruptions for engineers and clearer triage paths for responders.

When evaluating vendors, look for multi-source correlation (logs, metrics, traces, events, and change feeds), fast streaming ingestion, and contextual enrichment so a single correlated incident contains relevant traces, recent deploys, and ownership information.
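Duplicate suppression and ownership enrichment can be sketched in a few lines. The assumption here is that alerts sharing a source, check name and resource are duplicates, and that ownership comes from a CMDB extract, represented below by a hypothetical `OWNERS` dictionary.

```python
import hashlib

# Hypothetical ownership extract from a CMDB: resource -> owning team.
OWNERS = {"checkout": "team-web", "payments-db": "team-data"}

def fingerprint(alert):
    """Alerts with the same source, check and resource are treated as duplicates."""
    key = f"{alert['source']}|{alert['check']}|{alert['resource']}"
    return hashlib.sha256(key.encode()).hexdigest()[:12]

def deduplicate(alerts, owners=OWNERS):
    """Collapse duplicates into one record with a count, enriched with the owner."""
    grouped = {}  # dicts keep insertion order, so the first occurrence wins
    for alert in alerts:
        fp = fingerprint(alert)
        if fp in grouped:
            grouped[fp]["count"] += 1
        else:
            grouped[fp] = {**alert, "count": 1,
                           "owner": owners.get(alert["resource"], "unassigned")}
    return list(grouped.values())

raw = [{"source": "metrics", "check": "HighLatency", "resource": "checkout"}] * 3 + [
    {"source": "logs", "check": "DiskFull", "resource": "payments-db"}]
result = deduplicate(raw)
```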

Root cause in minutes via dependency-aware event correlation and topology

Speedy diagnosis depends on causal context, not just pattern matching. Platforms that map service and infrastructure topology — and use it to score causal relationships — let teams move from symptom to root cause in minutes. That topology should include dynamic dependencies (containers, serverless, network paths) and static ones (databases, storage, OT equipment).

Practical features to demand: dependency-aware correlation, visual service maps with drill-downs to flows and traces, and explainable reasoning for any root-cause suggestion so operators can trust and validate automated findings.
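One simple form of dependency-aware reasoning: among all alerting components, the likely root causes are those whose own dependencies are healthy. The sketch below assumes the topology is available as a plain "depends on" dictionary (a hypothetical stand-in for a discovered service map) and returns, for each candidate, the alerting components it would explain. That list doubles as the explanation an operator can check.

```python
def dependencies(depends_on, node):
    """All direct and transitive dependencies of `node` (iterative DFS, cycle-safe)."""
    seen, stack = set(), list(depends_on.get(node, []))
    while stack:
        dep = stack.pop()
        if dep not in seen:
            seen.add(dep)
            stack.extend(depends_on.get(dep, []))
    seen.discard(node)
    return seen

def root_cause_candidates(depends_on, alerting):
    """An alerting node is a candidate when none of its own dependencies is alerting.
    Each candidate is returned with the alerting nodes it would explain, as evidence."""
    closure = {n: dependencies(depends_on, n) for n in alerting}
    return {
        node: sorted(n for n in alerting if node in closure[n])
        for node in alerting
        if not closure[node] & alerting
    }

# Hypothetical topology: who depends on whom.
topology = {
    "web": ["checkout"],
    "checkout": ["payments", "inventory"],
    "payments": ["payments-db"],
    "inventory": ["inventory-db"],
}
alerting = {"web", "checkout", "payments", "payments-db"}
candidates = root_cause_candidates(topology, alerting)
```

Here four components are alerting, but only `payments-db` has no alerting dependency, and it accounts for the other three symptoms.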

Seasonality-aware anomaly detection and auto-baselining

Detection that treats every deviation as an incident creates more work, not less. The right AIOps models understand seasonality, business cycles, and operational baselines automatically, so anomalies are measured against realistic expectations instead of blunt thresholds.

Good solutions offer auto-baselining that adapts over time, configurable sensitivity for different signals and services, and the ability to attach business context (SLOs, peak windows) so alerts align with customer impact, not just metric variance.
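As a simple stand-in for the seasonal models commercial platforms use, the sketch below learns a separate mean and standard deviation for every (weekday, hour) slot and compares each reading only with its own slot. The `sensitivity` parameter plays the role of the configurable sensitivity mentioned above. Bucketing by hour-of-week is an assumption chosen for clarity, not how any particular product works.

```python
import statistics
from collections import defaultdict
from datetime import datetime, timedelta

def build_baseline(samples):
    """samples: iterable of (timestamp, value). Learns mean/std per (weekday, hour) slot."""
    buckets = defaultdict(list)
    for ts, value in samples:
        buckets[(ts.weekday(), ts.hour)].append(value)
    return {slot: (statistics.fmean(v), statistics.pstdev(v))
            for slot, v in buckets.items() if len(v) >= 2}

def is_anomalous(baseline, ts, value, sensitivity=3.0, min_std=1e-9):
    """Compare a reading with the baseline for its own slot; `sensitivity` is in std devs."""
    slot = baseline.get((ts.weekday(), ts.hour))
    if slot is None:
        return False  # no history for this slot yet: stay quiet rather than guess
    mean, std = slot
    return abs(value - mean) > sensitivity * max(std, min_std)

# Four Mondays of history: a busy 09:00 shift and a quiet 03:00 period.
samples = []
for week, (peak, night) in enumerate([(100, 10), (104, 12), (96, 8), (100, 10)]):
    day = datetime(2024, 1, 1) + timedelta(weeks=week)  # 2024-01-01 is a Monday
    samples += [(day.replace(hour=9), peak), (day.replace(hour=3), night)]
baseline = build_baseline(samples)
monday = datetime(2024, 1, 29)
```

The same reading of 100 is normal at 09:00 and anomalous at 03:00, a distinction a single static threshold cannot express.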

Forecasting and capacity planning that prevent incidents

Beyond detection, mature platforms predict resource trends and failure likelihoods so teams can act before incidents occur. Forecasting should cover capacity (CPU, memory, IOPS), load patterns, and component degradation when possible, with what-if scenario analysis for planned changes.

Key capabilities include time-series forecasting with confidence intervals, workload simulation for deployments or traffic spikes, and automated recommendations (scale-up, reshard, reprovision) tied to cost and risk trade-offs.

Closed-loop runbooks with approvals, rollbacks, and ITSM integration

Unsafe automation can turn a minor incident into a major outage. Effective AIOps ties detection and diagnosis to executable, auditable runbooks: safe playbooks that can be run automatically or after human approval, with built-in rollback, blast-radius controls, and integration with ITSM or CMMS for ticketing and change tracking.

Look for role-based approvals, canary and staged actions, complete audit trails, and bi-directional links to service tickets so automation improves MTTR without compromising governance or compliance.
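The safety primitives listed above can be illustrated with a small guarded executor. In this sketch, the runbook refuses to act without approval, refuses to touch more targets than its blast-radius limit, applies the fix one target at a time so the first acts as a canary, and rolls everything back on the first failure. Every step is written to an audit trail. The `Runbook`/`Executor` names and the example actions are hypothetical, not any vendor's API.

```python
from dataclasses import dataclass, field
from typing import Callable, List

@dataclass
class Runbook:
    name: str
    action: Callable[[str], bool]     # apply the fix to one target; True on success
    rollback: Callable[[str], None]   # undo the fix on one target
    requires_approval: bool = True
    max_targets: int = 5              # blast-radius limit

@dataclass
class Executor:
    audit: List[tuple] = field(default_factory=list)

    def run(self, rb, targets, approved_by=None):
        if rb.requires_approval and not approved_by:
            self.audit.append((rb.name, "-", "blocked: awaiting approval"))
            return "pending_approval"
        if len(targets) > rb.max_targets:
            self.audit.append((rb.name, "-", f"blocked: {len(targets)} targets > {rb.max_targets}"))
            return "blocked"
        done = []
        for target in targets:  # one at a time, so the first target acts as the canary
            ok = rb.action(target)
            self.audit.append((rb.name, target, f"{'ok' if ok else 'failed'} (approved by {approved_by})"))
            if not ok:
                # Undo everything touched so far, including the failed target in case
                # the fix was partially applied, newest first.
                for t in reversed(done + [target]):
                    rb.rollback(t)
                    self.audit.append((rb.name, t, "rolled back"))
                return "rolled_back"
            done.append(target)
        return "completed"

# Usage with hypothetical actions: the restart fails on node-3.
rolled = []

def restart(node):
    return node != "node-3"

def undo(node):
    rolled.append(node)

rb = Runbook("restart-line-gateway", restart, undo)
ex = Executor()
first = ex.run(rb, ["node-1"])  # no approval yet
second = ex.run(rb, ["node-1", "node-2", "node-3"], approved_by="ops-lead")
third = ex.run(rb, [f"node-{i}" for i in range(10)], approved_by="ops-lead")
```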

Together, these capabilities distinguish platforms that actually reduce downtime and cost from those that mostly sell promise. Next, we’ll translate these technical features into concrete business outcomes and show where they most quickly pay back.

Where AIOps analytics unlocks value in manufacturing

Predictive + prescriptive maintenance: −50% unplanned downtime, −40% maintenance cost

AIOps turns raw machine telemetry—vibration, temperature, current, cycle counts, PLC/SCADA events—into failure forecasts and prioritized work. By combining streaming anomaly detection with historical failure patterns, platforms predict which asset will fail, why, and when to intervene with the least disruption.

When integrated with CMMS and maintenance workflows, those predictions become prescriptive actions: schedule a zone-level repair, order the right part, or run a controlled test. That reduces emergency repairs, shortens downtime windows, and shifts teams to planned, cost-effective maintenance.
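The "prescriptive" half can be as simple as choosing when to intervene. The sketch below assumes a failure-time prediction already exists (the model itself is not shown) and that candidate maintenance slots are known together with the production they would cost. It picks the least disruptive slot that still leaves a safety margin before the predicted failure. All names and numbers are hypothetical.

```python
from datetime import datetime, timedelta

def pick_maintenance_window(predicted_failure, windows, safety_margin=timedelta(hours=24)):
    """windows: candidate slots as (start, lost_units), e.g. planned changeovers.
    Pick the slot with the fewest lost production units that starts at least
    `safety_margin` before the predicted failure; ties go to the earlier slot.
    Returns None when no slot is early enough (escalate instead)."""
    deadline = predicted_failure - safety_margin
    eligible = [w for w in windows if w[0] <= deadline]
    if not eligible:
        return None
    return min(eligible, key=lambda w: (w[1], w[0]))

# Usage: a model (not shown) predicts a gearbox failure around 10 March, 06:00.
predicted = datetime(2024, 3, 10, 6, 0)
windows = [
    (datetime(2024, 3, 7, 22, 0), 120),   # mid-run stop
    (datetime(2024, 3, 8, 14, 0), 40),    # planned changeover
    (datetime(2024, 3, 9, 18, 0), 10),    # low-volume shift, but too close to the failure
]
choice = pick_maintenance_window(predicted, windows)
```

The cheapest slot is rejected because it falls inside the safety margin, so the planned changeover is chosen instead; the result is what a CMMS work order would then be scheduled against.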

“Automated asset maintenance solutions have delivered ~50% reductions in unplanned machine downtime and ~40% reductions in maintenance costs; implementations also report ~30% improvement in operational efficiency and a 20–30% increase in machine lifetime.” Manufacturing Industry Challenges & AI-Powered Solutions — D-LAB research

Process and quality optimization: −40% defects, +30% operational efficiency

Linking OT sensors, vision systems, SPC metrics, and process parameters gives AIOps a full view of production quality. Correlating small shifts in sensor patterns with downstream defects lets you detect process drift before scrap or rework increases.
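Detecting small, persistent shifts is a classic statistical process control problem. The sketch below uses a two-sided tabular CUSUM, a standard SPC technique swapped in here for illustration (the article does not name a specific method). It accumulates small deviations from target and alarms once the accumulated drift passes a limit. The `k` and `h` defaults are common textbook choices, not tuned values.

```python
def cusum(readings, target, sigma, k=0.5, h=5.0):
    """Two-sided tabular CUSUM. Returns the index of the first reading at which the
    accumulated drift exceeds h*sigma in either direction, or None.
    k (in sigmas) is the slack that lets ordinary noise cancel out."""
    hi = lo = 0.0
    slack, limit = k * sigma, h * sigma
    for i, x in enumerate(readings):
        hi = max(0.0, hi + (x - target) - slack)   # evidence of an upward shift
        lo = max(0.0, lo + (target - x) - slack)   # evidence of a downward shift
        if hi > limit or lo > limit:
            return i
    return None

# Usage: a fill weight (target 10.0 g, sigma 0.1 g) creeps up by 1.2 sigma at index 10.
readings = [10.0] * 10 + [10.12] * 20
alarm_at = cusum(readings, target=10.0, sigma=0.1)
```

Every reading stays well inside a conventional 3-sigma control limit, yet CUSUM raises an alarm after eight drifted readings, early enough to adjust setpoints before scrap builds up.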

Actions can be automated or recommended: adjust setpoints, slow a line, trigger local inspections, or route product through a different quality gate. The result is fewer defects, higher yield, and more consistent throughput without throwing more people at the problem.

Energy and sustainability analytics: −20% energy cost, ESG reporting by design

AIOps ingests energy meters, HVAC controls, machine utilization and production rate to optimize energy per unit. It finds inefficient sequences, detects leaks or waste, and suggests schedule changes that shift heavy loads to lower‑cost hours or balance thermal systems more efficiently.

Because AIOps ties operational metrics to production output, it can produce energy-per-unit KPIs and automated ESG reports—turning sustainability from a compliance checkbox into a measurable cost lever.
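Two of the building blocks described above are easy to sketch: the energy-per-unit KPI and finding the cheapest contiguous block of hours for a load that can be shifted. The tariff below is hypothetical, and a real optimizer would also respect production constraints, thermal limits and demand charges.

```python
def energy_per_unit(kwh, units):
    """Energy-per-unit KPI; infinite when nothing was produced."""
    return kwh / units if units else float("inf")

def cheapest_block(hourly_prices, duration_hours):
    """Find the start hour of the cheapest contiguous block for a shiftable load.
    Returns (start_hour, summed_price); multiply summed_price by the load in kW
    to get the energy cost of running the load in that block."""
    if duration_hours > len(hourly_prices):
        raise ValueError("block longer than price horizon")
    sums = [sum(hourly_prices[s:s + duration_hours])
            for s in range(len(hourly_prices) - duration_hours + 1)]
    best = min(range(len(sums)), key=sums.__getitem__)
    return best, sums[best]

# Usage with a hypothetical tariff (price per kWh for hours 0..7).
prices = [0.30, 0.28, 0.12, 0.10, 0.11, 0.25, 0.32, 0.35]
kpi = energy_per_unit(1250.0, 500)          # kWh per unit for one shift
start, price_sum = cheapest_block(prices, 3)
```

Here a three-hour heavy load is cheapest starting at hour 2; tracking `kpi` over time shows whether such changes actually lower energy per unit.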

Supply chain sense-and-respond: −40% disruptions, −25% logistics costs

When factory status, inventory levels, and supplier events are correlated in real time, operations can react faster to upstream shocks. AIOps can surface signals — slowed cycle times, rising scrap, delayed inbound shipments — and kick off mitigation playbooks: reprioritise orders, reroute batches, or change packing/transport modes.

That tighter feedback loop lowers buffer inventory needs, reduces rush logistics, and preserves customer SLAs by turning raw telemetry into automated, auditable responses across OT, ERP, and logistics systems.

Digital twins and lights-out readiness: simulate before you automate (+30% output, 99.99% quality)

Digital twins let teams validate process changes, control strategies, or maintenance plans in a virtual replica fed by real telemetry. Coupled with AIOps, twins can run what-if scenarios (new shift patterns, increased throughput, component degradation) and surface risks before changes hit the shop floor.

“Lights-out factories and digital twins have been associated with ~99.99% quality rates and ~30% increases in productivity; digital twins additionally report 41–54% increases in profit margins and ~25% reductions in factory planning time.” Manufacturing Industry Disruptive Technologies — D-LAB research

These use cases are complementary: predictive maintenance keeps assets available, process optimization keeps quality high, energy analytics reduces cost per unit, supply‑chain sensing stabilizes flow, and digital twins let you scale automation safely. To capture these benefits in production you need a cleaned and connected data foundation, service and asset context, and safe automation policies—a practical blueprint and phased rollout make those elements real for plant teams.

Thank you for reading Diligize’s blog!

AIOps analytics reference architecture and a 90‑day rollout plan

Data foundation: connect observability, cloud events, ITSM, CMDB—and OT sources (SCADA, PLCs, historians)

Start with a single unified data plane that can accept high‑velocity telemetry (metrics, traces, logs), event streams (cloud events, deployment and change feeds), and OT signals (SCADA, PLCs, historians). The foundation should normalize and tag data at ingest so every signal can be tied to an asset, service, location, and owner.

Essential capabilities: streaming ingestion with backpressure handling, lightweight edge collectors for plant networks, secure connectors to ITSM and CMDB for enrichment, and consistent timestamping and identity resolution so cross-source correlation is reliable.
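Normalizing and tagging at ingest is what makes later correlation trustworthy. The sketch below converts one raw record into a common schema: a UTC timestamp (applying a site offset when a device emits naive local time), a numeric value, and asset/service/location/owner tags looked up from a registry. The `REGISTRY` contents and field names are hypothetical placeholders for data synced from a CMDB.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical registry, normally synced from the CMDB: device id -> identity tags.
REGISTRY = {
    "plc-07": {"asset": "press-line-2", "service": "stamping",
               "location": "plant-a", "owner": "ot-maintenance"},
}
UNKNOWN = {"asset": "unknown", "service": "unknown", "location": "unknown", "owner": "unassigned"}

def normalize(raw, source_utc_offset_hours=0):
    """Map one raw record onto a common schema: UTC timestamp, float value, identity tags."""
    ts = datetime.fromisoformat(raw["ts"])
    if ts.tzinfo is None:  # devices often emit naive local time; apply the site offset
        ts = ts.replace(tzinfo=timezone(timedelta(hours=source_utc_offset_hours)))
    return {
        "ts": ts.astimezone(timezone.utc).isoformat(),
        "signal": raw["signal"],
        "value": float(raw["value"]),
        **REGISTRY.get(raw["device"], UNKNOWN),
    }

plc = normalize({"ts": "2024-05-01T14:30:00", "device": "plc-07",
                 "signal": "motor_current_a", "value": "12.5"}, source_utc_offset_hours=2)
stray = normalize({"ts": "2024-05-01T12:30:00+00:00", "device": "edge-99",
                   "signal": "temp_c", "value": 41})
```

Unknown devices are tagged "unassigned" rather than dropped, which surfaces gaps in the CMDB instead of hiding them.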

Service map and context: topology, dependencies, and change data for causality

Build a living service and asset map that represents runtime dependencies (services, networks, databases, PLCs) as well as change history (deploys, config edits, maintenance work). This map is the “lens” AIOps uses to reason about causality—so invest in automated discovery plus manual overrides for accurate ownership and critical-path flags.

Expose the map to operators via visual topology views and APIs so correlation engines, runbooks, and alerting can reference explicit dependency paths and change timelines when prioritizing and explaining findings.

Models and policies: baselines, anomaly rules, SLOs, and enrichment

Layer lightweight real-time rules (thresholds, rate-of-change) with historical models that auto-baseline expected behaviour for each signal and service. Complement detection with business-aware policies—SLOs, maintenance windows, and seasonality—that reduce false positives and align alerts to customer impact.

Policy management should live alongside model configuration, with versioning, testing environments, and labelled training data so models can be audited and iterated safely.
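Business-aware policies can be expressed as small, versioned objects evaluated alongside detections. The sketch below assumes a policy with maintenance windows and an availability SLO. It pages only outside maintenance windows and only when the SLO is actually breached, and it cites the policy version in every decision so changes can be audited. The `Policy` shape is hypothetical; testing environments and labelled training data are out of scope here.

```python
from dataclasses import dataclass
from datetime import datetime

@dataclass(frozen=True)
class Policy:
    version: str                 # bump on every change so alerts can cite the policy used
    maintenance_windows: tuple   # ((start, end), ...) during which paging is suppressed
    slo_target: float            # e.g. 0.995 means 99.5% of requests must succeed

def should_page(policy, at, good, total):
    """Page only outside maintenance windows and only when the SLO is actually breached."""
    if any(start <= at < end for start, end in policy.maintenance_windows):
        return False, f"suppressed: maintenance window (policy {policy.version})"
    availability = good / total if total else 1.0
    if availability < policy.slo_target:
        return True, (f"availability {availability:.2%} below SLO "
                      f"{policy.slo_target:.1%} (policy {policy.version})")
    return False, f"within SLO (policy {policy.version})"

policy = Policy(
    version="2024-06-01.1",
    maintenance_windows=((datetime(2024, 6, 2, 1), datetime(2024, 6, 2, 5)),),
    slo_target=0.995,
)
during_window = should_page(policy, datetime(2024, 6, 2, 3), good=900, total=1000)
breach = should_page(policy, datetime(2024, 6, 2, 9), good=990, total=1000)
healthy = should_page(policy, datetime(2024, 6, 2, 9), good=999, total=1000)
```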

Automate the top 5 incidents: safe runbooks, approvals, and CMMS/Maximo integration

Identify the five incident types that drive most downtime or manual effort and implement closed-loop runbooks for them first. Each runbook should include: a playbook description, pre-checks, graded actions (observe → remediate → escalate), canary stages, rollback steps, and explicit approval gates.

Integrate runbooks with ITSM/CMMS so automation creates or updates tickets, attaches evidence, and records outcomes. Enforce role-based approvals and blast-radius controls so automation reduces mean time to repair without exposing the plant to unsafe actions.
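Picking the "top five" is a Pareto exercise over incident history. The sketch below scores each incident type by weighted downtime plus manual effort and returns the top five with their share of the total. The field names, sample history and cost weights are hypothetical placeholders to be replaced with your own ticket data and finance figures.

```python
from collections import defaultdict

def top_incident_types(incidents, n=5, downtime_minute_cost=1.0, engineer_hour_cost=1.0):
    """Rank incident types by weighted downtime plus manual effort (a Pareto cut).
    incidents: iterable of dicts with 'type', 'downtime_min' and 'manual_hours'.
    Returns [(type, score, share_of_total), ...] for the top n types."""
    score = defaultdict(float)
    for inc in incidents:
        score[inc["type"]] += (inc["downtime_min"] * downtime_minute_cost
                               + inc["manual_hours"] * engineer_hour_cost)
    total = sum(score.values()) or 1.0
    ranked = sorted(score.items(), key=lambda kv: kv[1], reverse=True)[:n]
    return [(kind, s, s / total) for kind, s in ranked]

# Hypothetical quarter of incident history and placeholder cost weights.
history = [
    {"type": "conveyor-jam", "downtime_min": 30, "manual_hours": 1},
    {"type": "conveyor-jam", "downtime_min": 30, "manual_hours": 1},
    {"type": "plc-comm-loss", "downtime_min": 45, "manual_hours": 2},
    {"type": "mes-queue-backlog", "downtime_min": 20, "manual_hours": 1},
    {"type": "cert-expiry", "downtime_min": 10, "manual_hours": 4},
    {"type": "label-printer", "downtime_min": 5, "manual_hours": 0.5},
    {"type": "historian-disk-full", "downtime_min": 0, "manual_hours": 3},
]
top5 = top_incident_types(history, downtime_minute_cost=50, engineer_hour_cost=80)
```

Weighting both downtime and effort matters: an incident type like certificate expiry causes little downtime but enough manual work to make the list.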

Prove ROI: track MTTR, ticket volume, downtime, OEE, and energy per unit

Define a compact set of success metrics before you start and collect baseline values. Useful KPIs include MTTR, alert/ticket volume, incident frequency, production downtime, OEE (or equivalent throughput measures), and energy-per-unit for energy-related initiatives. Instrument dashboards that show current state, trend, and the contribution of AIOps-driven actions.

Use short, measurable hypotheses (for example: “correlation reduces duplicate alerts for Service X by Y%”) to validate both technical and business impact during the rollout.
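The core KPI arithmetic is simple enough to sketch directly. Below, MTTR is computed from detection and resolution timestamps, compared with a baseline as a percentage change, and checked against a hypothesis. OEE uses its standard definition (availability × performance × quality). The sample durations, ratios and the 30% target are hypothetical.

```python
from datetime import datetime, timedelta

def mttr_minutes(incidents):
    """Mean time to repair; incidents are (detected_at, resolved_at) pairs."""
    durations = [(end - start).total_seconds() / 60 for start, end in incidents]
    return sum(durations) / len(durations)

def pct_change(baseline, current):
    return (current - baseline) / baseline * 100

def oee(availability, performance, quality):
    """Standard OEE: the product of the availability, performance and quality ratios."""
    return availability * performance * quality

def incidents_from(durations_min, start=datetime(2024, 1, 1)):
    """Helper that builds (detected, resolved) pairs from durations, for the example only."""
    return [(start, start + timedelta(minutes=d)) for d in durations_min]

before = mttr_minutes(incidents_from([90, 60, 120]))   # baseline period
after = mttr_minutes(incidents_from([45, 30, 60]))     # pilot period
change = pct_change(before, after)
hypothesis_met = change <= -30   # e.g. "MTTR on the pilot line falls by at least 30%"
line_oee = oee(0.90, 0.95, 0.99)
```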

The 90‑day phased rollout

Phase 1 — Weeks 0–4: kickoff and foundation. Assemble a small, cross-functional team (operations, OT engineering, security, and a product owner). Complete source inventory, deploy collectors to high-value systems, sync CMDB and ownership data, and enable a read-only service map. Deliverable: a working data pipeline and a shortlist of top-5 incident types.

Phase 2 — Weeks 5–8: detection and context. Deploy auto-baselining and initial anomaly rules against a subset of services and assets. Implement dependency-aware correlation for those assets and create the first runbook templates. Integrate with ITSM for ticket creation and notifications. Deliverable: validated detections and one automated, human‑approved runbook in production.

Phase 3 — Weeks 9–12: automation, measurement, and scale. Harden runbooks with staged automation and rollback, expand coverage to remaining critical assets, and enable forecasting/capacity features where useful. Finalize dashboards, define handover processes, and run a formal review of ROI against baseline metrics. Deliverable: production-grade automation for top incidents and a business impact report for stakeholders.

Governance, safety, and continuous improvement

Throughout the rollout, enforce security and compliance: encrypted transport, least-privilege access, retention policies, and audit trails for every automated action. Treat automation like a controlled change: canary actions, approval workflows, and post-mortem learning loops. Schedule regular cadences (weekly ops reviews, monthly model retraining and policy reviews) to keep detections accurate and playbooks current.

With a validated architecture and early wins in hand, you’ll be ready to compare platforms against real operational needs and prioritize tooling decisions that lock in those gains and drive technology value creation.

Tooling landscape and buying checklist

Coverage and openness: APIs, streaming, edge agents, and data gravity

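Choose platforms that meet your deployment reality rather than forcing you to reshape operations. Key signals of fit are open APIs, streaming ingestion, lightweight edge agents that run on plant networks, and the ability to analyze data where it already lives (data gravity) instead of forcing every signal into a single store.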

Correlation at scale with explainability (not black-box alerts)

Correlation quality separates marketing claims from operational value. Don’t accept opaque AI: demand explainable correlations and evidence that links alerts to probable causes.

Automation safety: blast-radius controls, canary actions, and audit trails

Automation must be powerful and constrained. Verify the platform’s safety primitives before you enable autonomous remediation.

Cost governance: ingest economics, retention tiers, and data minimization

Telemetry costs can outpace value if not governed. Make economics part of the buying conversation.

Security alignment: ISO 27001/SOC 2/NIST controls and role-based access

Customer outcomes to demand: MTTR down, false positives down, OEE up

Vendors should sell outcomes, not only features. Ask for measurable, contractable outcomes and proof points: lower MTTR, fewer false positives, and higher OEE, each measured against your own baseline.

When comparing tools, score them not only on immediate feature match but on long-term operability: how they fit your data topology, how safely they automate, and how clearly they demonstrate business impact. With that scorecard in hand you can short-list vendors for a focused, metric-driven pilot that proves whether a platform will deliver uptime, quality, and ROI.