Private equity deals live and die on two things: the story you sell at acquisition, and the numbers you prove at exit. Operations consulting sits between those moments — it’s the practical, hands‑on work that turns a growth thesis into real cash and a cleaner EBITDA story. When investors need results fast, operational fixes often deliver the quickest, most reliable upside.

This article walks through the specific value levers that move EBITDA in 6–12 months — not vague strategy, but repeatable interventions you can measure and track. You’ll see how pricing and packaging, retention and net revenue retention (NRR), sales efficiency, throughput and maintenance, SG&A automation, and working‑capital optimization each produce clear line‑item effects on profit and cash. For each lever we explain what to fix first, what to expect, and how to size the upside so the board and LPs can see progress every week.

If you’re short on time, read this as a practical checklist: levers to prioritize in the first 100 days, the quick diagnostics that prove impact, and the reporting cadence that keeps everyone aligned toward a stronger, exit‑ready multiple.

- Pricing & deal economics: small price or mix moves that lift average order value and margin immediately.

- Retention & NRR: reduce churn and increase lifetime value with targeted success and support automation.

- Sales & deal velocity: smarter outreach and intent data to close more deals faster.

- Throughput & reliability: operational fixes and predictive maintenance to raise output and cut downtime.

- SG&A automation: reclaim capacity and reduce costs by removing manual work.

- Working capital: inventory and receivable improvements that free cash without harming growth.

Read on to see how to size each lever, build a sequenced 100‑day plan, and put straightforward metrics in front of stakeholders so operational progress becomes an investable, exitable story.

What great private equity operations consulting looks like today

Operational due diligence that quantifies pricing power, churn, and throughput

Excellent ops consulting begins with diligence that is diagnostic and quantifiable. Teams map causal links between commercial levers (pricing, packaging, sales motion), retention dynamics (why customers leave or expand) and operational throughput (capacity, cycle times, bottlenecks), then translate those links into stress‑tested scenarios that feed the investment thesis. The output is not a checklist but a small set of tested hypotheses with measurable KPIs and clear data gaps to close during early engagement.

A 100-day plan tied to the investment thesis and cash impact

Top-tier teams convert diligence outcomes into a focused 100‑day playbook that prioritizes actions by cash and EBITDA impact, implementation complexity, and owner alignment. That plan allocates accountability (who owns delivery), sets temporary governance (decision rights and escalation paths), and sequences initiatives so quick wins free up resources for larger, higher‑value programs. Each sprint closes with a reconciled cash forecast so leaders can see a direct link from execution to liquidity and valuation.

Operator-plus-technologist team for field execution, not slideware

Effective engagements pair experienced operators—people who have run P&Ls, led transformations and managed teams—with technologists who can build, instrument and scale solutions in the business environment. The emphasis is on short, iterative deployments in production environments: pilot, measure, harden, then scale. Deliverables are working processes, dashboards and automation that front‑line teams use daily—not presentation decks that never leave the conference room.

Weekly KPI cadence: EBITDA bridge, cash conversion, and customer health

Great operating partners establish a tight cadence of weekly reviews focused on a compact dashboard: an EBITDA bridge that explains variance, a cash conversion tracker that highlights working capital and capex movement, and customer health signals that predict retention and expansion. These reviews use a common data model, spotlight exceptions, and convert insights into owner-assigned actions with clear deadlines so momentum is preserved and course corrections are fast.

With those building blocks—diagnostic rigor, a cash‑focused plan, operator+tech delivery and a disciplined KPI rhythm—teams are set up to move quickly from insight to measurable improvement. The next part of the post walks through the concrete value levers you can deploy in the near term to convert that operational foundation into realized EBITDA uplift.

Six levers that move EBITDA in 6–12 months

Retention and NRR: AI sentiment, success platforms, and GenAI support cut churn 30% and lift revenue 20%

“GenAI call‑centre assistants and AI‑driven customer success platforms consistently demonstrate material retention benefits: acting on customer feedback can drive ≈20% revenue uplift, while GenAI support and success tooling can reduce churn by around 30% — a direct lever on Net Revenue Retention and short‑term EBITDA (sources: Vorecol; CHCG).” Portfolio Company Exit Preparation Technologies to Enhance Valuation — D-LAB research

How to act: instrument voice-of-customer and product-usage signals quickly, surface at-risk accounts with a health score, and deploy playbooks that combine proactive outreach with AI-assisted support to capture upsell moments. Start with a 30–60 day pilot on a high-value cohort, measure NRR and churn delta, then scale retention playbooks into renewals and CX workflows.
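
To make the NRR and churn deltas concrete, here is a minimal Python sketch. It assumes ARR is tracked per cohort and that the pilot and control cohorts are comparable. NRR uses the usual definition: starting revenue plus expansion, minus contraction and churn, divided by starting revenue. The `health_score` function, its inputs and its weights are hypothetical placeholders that would need calibrating against actual renewal outcomes. All figures are made up.

```python
from dataclasses import dataclass


@dataclass
class Cohort:
    """Recurring revenue for one customer cohort over a measurement period."""
    starting_arr: float   # ARR from the cohort at the start of the period
    expansion: float      # upsell and cross-sell ARR added during the period
    contraction: float    # ARR lost to downgrades
    churned: float        # ARR lost from customers who left
    starting_logos: int   # customer count at the start of the period
    churned_logos: int    # customers lost during the period


def nrr(c: Cohort) -> float:
    """Net revenue retention: (start + expansion - contraction - churn) / start."""
    return (c.starting_arr + c.expansion - c.contraction - c.churned) / c.starting_arr


def logo_churn(c: Cohort) -> float:
    """Share of starting customers lost during the period."""
    return c.churned_logos / c.starting_logos


def health_score(usage_trend: float, sentiment: float, open_tickets: int) -> float:
    """Hypothetical 0-100 score; inputs and weights are illustrative, not calibrated.

    usage_trend:  change in product usage vs the prior period (-0.2 means a 20% drop)
    sentiment:    mean voice-of-customer sentiment, scaled to [-1, 1]
    open_tickets: unresolved support tickets for the account
    """
    usage = max(0.0, min(1.0, 0.5 + usage_trend))
    mood = (sentiment + 1) / 2
    support = max(0.0, 1 - open_tickets / 10)
    return round(100 * (0.5 * usage + 0.3 * mood + 0.2 * support), 1)


# Illustrative pilot vs control cohorts (made-up figures).
pilot = Cohort(1_000_000, 120_000, 20_000, 50_000, 200, 8)
control = Cohort(1_000_000, 80_000, 30_000, 90_000, 200, 14)
print(f"NRR delta: {(nrr(pilot) - nrr(control)) * 100:+.1f} pts")
print(f"Logo churn delta: {(logo_churn(pilot) - logo_churn(control)) * 100:+.1f} pts")
print("At risk:", health_score(-0.3, -0.5, 6) < 50)
```

Reporting the deltas in percentage points keeps them comparable week to week as the pilot cohort renews.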

Deal volume: AI sales agents and buyer intent data lift close rates 32% and shorten cycles up to 40%

“AI sales agents and buyer‑intent platforms improve pipeline efficiency and conversion — studies and practitioner data show ~32% higher close rates and sales‑cycle reductions in the high‑teens to ~40% range, while automating CRM tasks saves ~30% of reps’ time and can double top‑line effectiveness in pilot programs.” Deal Preparation Technologies to Enhance Valuation of New Portfolio Companies — D-LAB research

How to act: deploy AI agents to automate lead enrichment, qualification and outreach while integrating buyer‑intent feeds to prioritise high-propensity prospects. Pair lightweight pilots (one product line or region) with rigorous funnel metrics so sellers convert higher-quality leads faster and marketing can reallocate spend to the most effective channels.
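
Funnel metrics for a pilot take very little code to track. The sketch below computes close rate and median cycle length, then compares a pilot period with a baseline. It assumes opportunities can be exported from the CRM with created and closed dates and a won flag; the field names are hypothetical and the sample data is invented.

```python
from datetime import date
from statistics import median


def funnel_metrics(opps):
    """Close rate and median sales-cycle length for a list of opportunities.

    Each opportunity is a dict with 'created' (date), 'closed' (date, or None
    while still open) and 'won' (bool). Field names are hypothetical.
    """
    closed = [o for o in opps if o["closed"] is not None]
    won = [o for o in closed if o["won"]]
    close_rate = len(won) / len(closed) if closed else 0.0
    cycle_days = [(o["closed"] - o["created"]).days for o in won]
    return {
        "close_rate": close_rate,
        "median_cycle_days": median(cycle_days) if cycle_days else None,
    }


def relative_change(pilot_value, baseline_value):
    """Relative change of the pilot vs the baseline (0.25 means +25%)."""
    return (pilot_value - baseline_value) / baseline_value


def opp(created, closed, won):
    return {"created": created, "closed": closed, "won": won}


# Made-up opportunities for one region before and during the pilot.
baseline = [
    opp(date(2024, 1, 1), date(2024, 3, 1), True),
    opp(date(2024, 1, 5), date(2024, 2, 1), False),
    opp(date(2024, 1, 9), date(2024, 2, 20), False),
    opp(date(2024, 1, 12), date(2024, 3, 3), False),
]
pilot = [
    opp(date(2024, 4, 1), date(2024, 5, 1), True),
    opp(date(2024, 4, 2), date(2024, 5, 12), True),
    opp(date(2024, 4, 3), date(2024, 4, 30), False),
    opp(date(2024, 4, 4), date(2024, 5, 2), False),
    opp(date(2024, 4, 5), None, False),  # still open: excluded from close rate
]
b, p = funnel_metrics(baseline), funnel_metrics(pilot)
print("Close-rate lift:", relative_change(p["close_rate"], b["close_rate"]))
print("Cycle-time change:", relative_change(p["median_cycle_days"], b["median_cycle_days"]))
```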

Deal size: recommendations and dynamic pricing boost AOV up to 30% and add 10–15% revenue

Recommendation engines and dynamic pricing are immediate levers to increase average order value and margin capture. Implement a recommendation pilot in the checkout or sales enablement flow to lift cross-sell and bundling rates, and run a parallel dynamic‑pricing model on a subset of SKUs or segments to capture willingness-to-pay. Combine experiments with guardrails (price floors, contract rules) and monitor uplift by cohort to move from pilot to portfolio-wide rollout.
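
Guardrails are easiest to audit when they run as a separate, deterministic step after the pricing model. Here is a minimal sketch, assuming a model already proposes a price per SKU. Three rules apply, all illustrative rather than recommendations: a 25% gross-margin floor, a ±10% band around list price, and an override for contracted prices.

```python
def guarded_price(model_price, list_price, unit_cost,
                  min_margin=0.25, max_move=0.10, contracted_price=None):
    """Clamp a model-recommended price to illustrative guardrails.

    - A contracted price always wins over the model.
    - The price stays within +/- max_move of list price.
    - The gross-margin floor (unit_cost / (1 - min_margin)) is never breached,
      even if that means going above the band.
    """
    if contracted_price is not None:
        return contracted_price
    band_low = list_price * (1 - max_move)
    band_high = list_price * (1 + max_move)
    margin_floor = unit_cost / (1 - min_margin)
    price = min(max(model_price, band_low), band_high)
    return round(max(price, margin_floor), 2)


print(guarded_price(70, list_price=100, unit_cost=60))    # model too low: lifted to band
print(guarded_price(105, list_price=100, unit_cost=85))   # margin floor overrides the band
print(guarded_price(120, list_price=100, unit_cost=60, contracted_price=88))
```

The margin floor is applied last on purpose. If cost drifts above the band, the guardrail protects margin rather than the list-price corridor.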

SG&A efficiency: workflow automation, co-pilots, and assistants remove 40–50% of manual work

Automating repetitive tasks across sales, finance, and customer support can materially compress SG&A. Target high‑volume, low‑complexity activities first—CRM updates, invoice processing, routine reporting—then introduce AI co‑pilots to accelerate knowledge work. Focus on measurable time saved, redeploying staff to revenue‑generating or retention activities rather than broad headcount cuts.
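
Time saved converts to capacity with simple arithmetic: volume × handling time × the share that can realistically be automated. The sketch assumes 1,800 productive hours per FTE per year and uses hypothetical task volumes. Both should be replaced with figures from a time-and-motion sample before they go to the board.

```python
def reclaimed_capacity(tasks, hours_per_fte_year=1_800):
    """Estimate hours and FTE-equivalents freed by automating repetitive work.

    tasks: iterable of (name, items_per_year, minutes_per_item, automatable_share)
    hours_per_fte_year: assumed productive hours per full-time employee
    """
    hours = {name: items * minutes / 60 * share
             for name, items, minutes, share in tasks}
    total = sum(hours.values())
    return hours, total, total / hours_per_fte_year


# Hypothetical volumes; replace with measured data.
tasks = [
    ("Invoice processing", 24_000, 6, 0.8),
    ("CRM updates", 50_000, 3, 0.5),
    ("Weekly reporting", 52, 240, 0.7),
]
by_task, total_hours, ftes = reclaimed_capacity(tasks)
print(f"{total_hours:,.0f} hours, about {ftes:.1f} FTE of capacity to redeploy")
```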

Throughput: predictive maintenance and digital twins raise output 30% and cut downtime 50%

For industrial or production-heavy businesses, predictive maintenance and digital twins unlock rapid uptime and yield improvements. Start by instrumenting critical assets, deploy anomaly detection and prescriptive alerts, and run quick validation sprints to prove reduced downtime. Once validated, scale across the fleet to convert improved utilization into visible margin expansion.
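
Production anomaly detection usually relies on asset-specific models. For a first validation sprint, though, a rolling z-score is a reasonable stand-in, and it is the technique used here. The sketch flags any reading more than three standard deviations from the mean of the previous 20 readings. The window, threshold and vibration data are assumptions for illustration.

```python
from statistics import mean, stdev


def rolling_zscore_alerts(readings, window=20, threshold=3.0):
    """Return indices whose reading deviates more than `threshold` sample
    standard deviations from the mean of the preceding `window` readings."""
    alerts = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(readings[i] - mu) / sigma > threshold:
            alerts.append(i)
    return alerts


# Made-up vibration readings (mm/s): steady alternation, one spike at index 30.
vibration = [1.0, 1.1] * 15 + [5.0] + [1.0] * 5
print(rolling_zscore_alerts(vibration))
```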

Working capital: inventory and supply chain optimization reduce costs 25% and obsolescence 30%

Working-capital levers are low-friction ways to free cash and improve cash conversion. Use demand-driven planning, multi-echelon inventory optimization and SKU rationalization to cut carrying costs and obsolescence. Renegotiate supplier payment terms where possible and bundle procurement for scale. Small improvements in inventory turns release cash quickly, and the lower carrying and obsolescence costs then show up in EBITDA.
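
Sizing cash and EBITDA effects separately avoids double counting. The sketch applies standard working-capital arithmetic to a hypothetical company:

- average inventory ≈ COGS ÷ turns
- receivables ≈ revenue ÷ 365 × DSO

The 10% holding-cost rate is an assumption. It should exclude the cost of capital, which sits below EBITDA.

```python
def inventory_cash_release(annual_cogs, turns_before, turns_after):
    """Cash freed when inventory turns improve: average inventory = COGS / turns."""
    return annual_cogs / turns_before - annual_cogs / turns_after


def receivables_cash_release(annual_revenue, dso_before, dso_after, days=365):
    """Cash freed by collecting faster (DSO = days sales outstanding)."""
    return annual_revenue / days * (dso_before - dso_after)


def holding_cost_saving(inventory_released, holding_cost_rate):
    """Recurring P&L saving on inventory no longer held.

    holding_cost_rate is an assumption covering storage, handling, insurance
    and obsolescence only; the cost of capital sits below EBITDA.
    """
    return inventory_released * holding_cost_rate


# Hypothetical company: $60M COGS, $100M revenue.
inv = inventory_cash_release(60e6, turns_before=4, turns_after=5)
ar = receivables_cash_release(100e6, dso_before=55, dso_after=45)
saving = holding_cost_saving(inv, holding_cost_rate=0.10)
print(f"Inventory cash: ${inv:,.0f}; receivables cash: ${ar:,.0f}; EBITDA: ${saving:,.0f}/yr")
```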

Each of these levers is actionable inside a 6–12 month window when you combine clear measurement, focused pilots and an operator-led rollout. The practical question becomes sequencing: which levers to prioritise first, how to size expected impact, and how to run delivery with owners in the business — next we show a rapid engagement approach that answers exactly that and keeps execution fast and measurable.

Our engagement model, built for speed

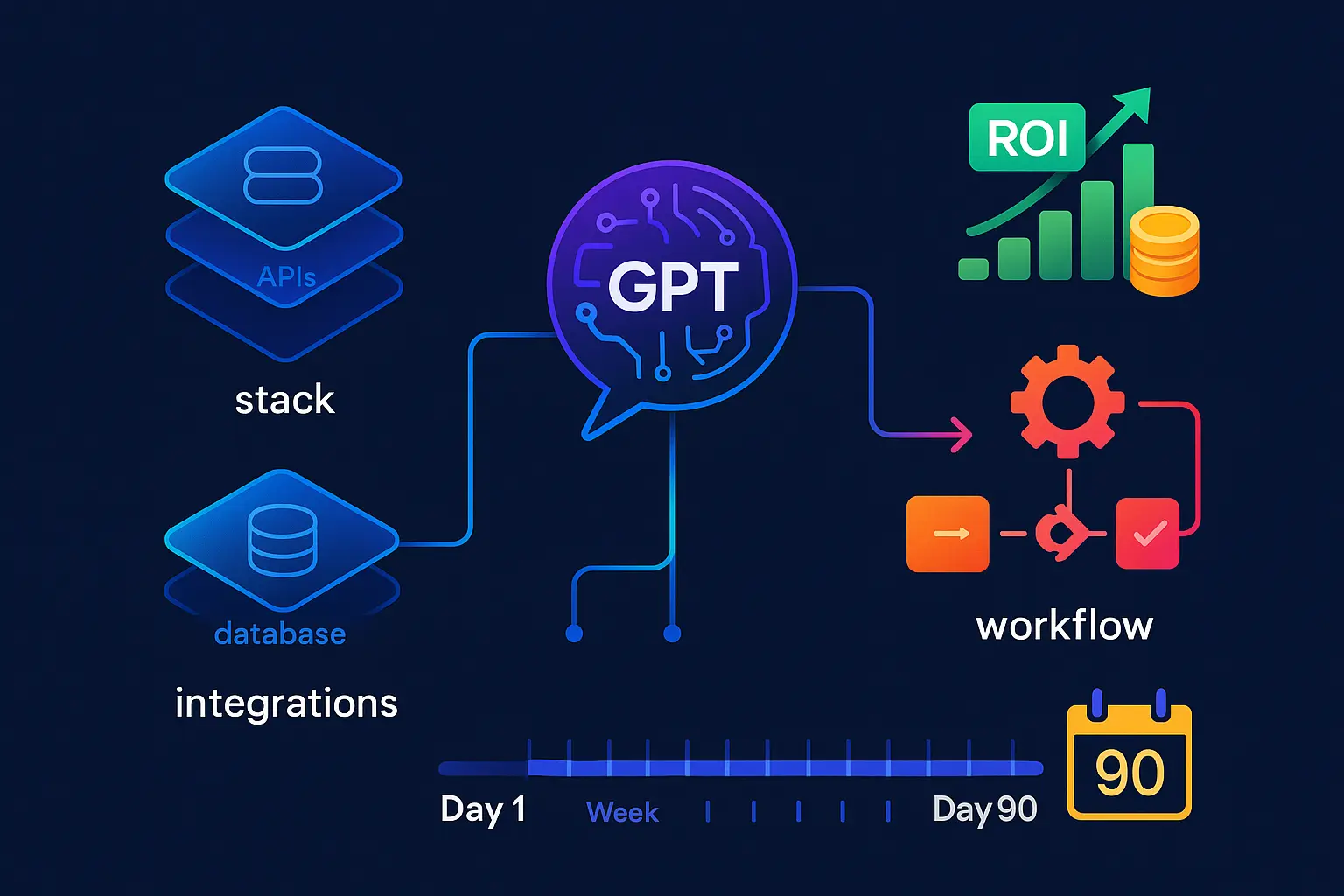

Pre-deal ops and tech diligence in 2–3 weeks, including cyber and IP risk

We compress early diligence into a focused 2–3 week sprint that surfaces the critical operational and technology risks and the highest‑probability value levers. Deliverables include a prioritized risk heatmap (cyber, IP, vendor concentration), a short list of quick-win initiatives, and a data‑readiness checklist so post-close work can begin immediately with minimal ramp.

Six-week diagnostic to size each lever and build a sequenced roadmap

The diagnostic phase turns hypotheses into sized opportunities. Over six weeks we ingest a subset of commercial, product and operational data, run targeted analytics to estimate EBITDA and cash impact, and produce a sequenced roadmap that balances fast cash impact with medium‑term capability builds. Each initiative in the roadmap is scored by impact, effort, required tech, and owner.
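
A simple value-per-effort score is one way to turn the impact, effort, tech and owner dimensions into a ranked roadmap. The sketch below is illustrative, and three of its rules are assumptions to agree with the deal team:

- one-off cash is weighted at half of run-rate EBITDA;
- initiatives that need new technology carry a 1.5× effort penalty;
- unowned initiatives score zero.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Initiative:
    name: str
    ebitda_impact: float       # sized annual run-rate EBITDA, $k
    cash_impact: float         # one-off cash release, $k
    effort: int                # 1 (low) to 5 (high)
    needs_new_tech: bool       # requires tooling the company does not have yet
    owner: Optional[str]       # accountable executive, None if unassigned


def priority(i: Initiative, cash_weight=0.5, tech_penalty=1.5) -> float:
    """Illustrative value-per-effort score; weights are assumptions."""
    if not i.owner:
        return 0.0  # no owner, no slot in the 100-day plan
    value = i.ebitda_impact + cash_weight * i.cash_impact
    cost = i.effort * (tech_penalty if i.needs_new_tech else 1.0)
    return value / cost


# Hypothetical roadmap items.
roadmap = [
    Initiative("Pricing guardrails", 800, 0, 2, False, "CRO"),
    Initiative("Inventory optimization", 300, 2_000, 3, True, "COO"),
    Initiative("Churn playbooks", 600, 0, 3, True, None),
]
for item in sorted(roadmap, key=priority, reverse=True):
    print(f"{priority(item):7.1f}  {item.name}  (owner: {item.owner or 'unassigned'})")
```

Scoring unowned work at zero reflects the owner-alignment point above. A large opportunity without an accountable executive drops to the bottom until someone picks it up.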

100-day sprint with owner alignment and operating partner cadence

The first 100 days are treated as an execution sprint: clear owners, a weekly operating cadence, and an embedded operating partner who coordinates pilots, removes blockers and ensures handoffs. The sprint focuses on piloting the highest‑value levers, proving outcomes with real data, and hardening processes so wins are repeatable after the sprint ends.

Value tracking: dashboarding NRR, AOV, cycle time, and working capital

Fast engagements require fast measurement. We deliver a compact dashboard that tracks the handful of KPIs tied to the investment thesis—examples include Net Revenue Retention, average order value, key cycle times and working capital metrics—so leadership can see the EBITDA bridge evolve weekly and validate which initiatives to scale.

Running these phases in tight sequence—diligence, diagnostic, 100‑day delivery and continuous value tracking—keeps the program focused on cash and EBITDA while minimizing disturbance to the business. Once execution is proving the plan, the natural next focus is locking those gains in place by addressing the technical and legal controls that preserve value through exit, which we cover next.

Thank you for reading Diligize’s blog!

Protect the multiple: IP, data, and cybersecurity that de-risk the story

Monetize and protect IP: registries, licensing options, and freedom to operate

Start by treating IP as a balance‑sheet asset. Run a rapid IP inventory: catalog patents, trademarks, copyrights, proprietary algorithms, key business processes and embedded know‑how. Map ownership (employee and contractor assignments), third‑party dependencies and any open‑source exposures that could block sale or licensing.

Next, size commercial options: which assets can be licensed, franchised or carved out to create new revenue streams? Prioritise low‑friction moves (registries, standard license templates, defensive filings) that increase perceived scarcity and create narrative points for buyers. Finally, perform a freedom‑to‑operate review to identify infringement risks and remediate early — buyers discount for unresolved legal exposure; sellers benefit by removing that haircut.

Security that sells: ISO 27002, SOC 2, and NIST CSF 2.0 proof points in enterprise deals

“Cybersecurity and compliance materially de‑risk exits: the average data breach cost was $4.24M in 2023 and GDPR fines can hit up to 4% of annual revenue. Frameworks like ISO 27002, SOC 2 and NIST 2.0 not only raise buyer confidence but have delivered tangible commercial outcomes (e.g., a firm secured a $59.4M DoD contract despite being $3M more expensive after adopting NIST).” Portfolio Company Exit Preparation Technologies to Enhance Valuation — D-LAB research

Use that reality to prioritize the controls buyers actually ask for. Run a focused security gap assessment mapped to the frameworks your buyer set values most. Enterprise customers typically ask for a SOC 2 report or ISO/IEC 27001 certification (ISO/IEC 27002 provides the control guidance behind it), while defense or government work will point to NIST. Deliverables to investors should include a prioritized remediation plan, an evidence pack (policies, pen‑test reports, access logs), and an incident‑response capability so the company can demonstrate both prevention and recovery.

Practical quick wins: implement least‑privilege IAM, encrypt sensitive data at rest and in transit, formalise vendor security reviews, and automate basic monitoring and alerting so you can present operational telemetry during diligence rather than promises.

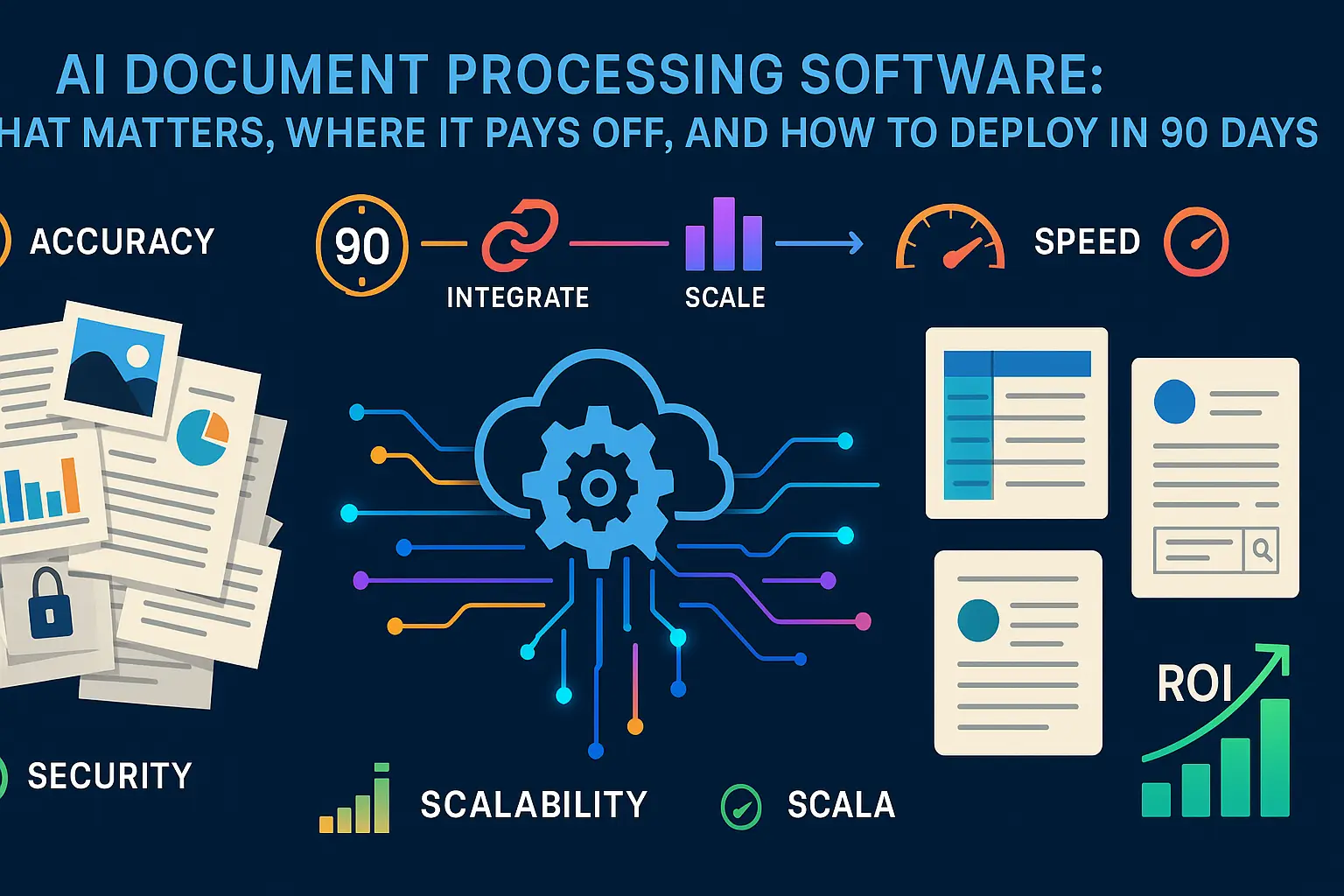

Data readiness for personalization and pricing: governance, access, and quality

Data is both a value driver and a risk. Prepare it so buyers can underwrite revenue uplift from personalization or dynamic pricing without fearing compliance or quality surprises. Build a lightweight data catalog, define ownership and lineage for revenue‑critical datasets, and run data‑quality checks on the metrics buyers will look at (NRR, churn, AOV, conversion rates).
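
The data-quality checks worth running first are the ones a buyer's analysts will repeat: completeness, uniqueness and reconciliation to the ledger. Here is a minimal sketch, assuming a revenue extract held as a list of records. The field names and the 0.5% reconciliation tolerance are hypothetical.

```python
def quality_report(rows, key, required, amount_field="amount",
                   ledger_total=None, tolerance=0.005):
    """Basic checks on a revenue-critical extract before it feeds a KPI.

    rows: list of dicts, e.g. one per invoice line (field names hypothetical)
    key: field that should be unique per row
    required: fields that must be present and non-empty
    ledger_total: optional general-ledger figure the extract should reconcile to
    tolerance: allowed relative gap for the reconciliation (0.005 = 0.5%)
    """
    missing = {f: sum(1 for r in rows if r.get(f) in (None, "")) for f in required}
    seen, duplicates = set(), 0
    for r in rows:
        if r.get(key) in seen:
            duplicates += 1
        seen.add(r.get(key))
    report = {"rows": len(rows), "missing": missing, "duplicate_keys": duplicates}
    if ledger_total is not None:
        extract_total = sum(r.get(amount_field) or 0 for r in rows)
        gap = abs(extract_total - ledger_total) / abs(ledger_total)
        report["reconciles"] = gap <= tolerance
    report["passed"] = (duplicates == 0 and not any(missing.values())
                        and report.get("reconciles", True))
    return report


# Made-up invoice extract with a duplicate row and a missing customer.
rows = [
    {"invoice_id": "A1", "customer_id": "C1", "amount": 1000.0},
    {"invoice_id": "A2", "customer_id": "C2", "amount": 2500.0},
    {"invoice_id": "A2", "customer_id": "C2", "amount": 2500.0},
    {"invoice_id": "A3", "customer_id": None, "amount": 400.0},
]
report = quality_report(rows, key="invoice_id",
                        required=["customer_id", "amount"], ledger_total=3900.0)
print(report)
```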

Enable safe experimentation: add feature flags, consent tracking and a reproducible A/B framework so any claimed uplift can be demonstrated and audited. Wherever pricing power is material, capture price elasticity tests and the data that supports dynamic‑pricing algorithms; that evidence converts operational gains into credible valuation upside.
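
Two pieces make an A/B result reproducible: deterministic assignment and a documented significance test. The sketch hashes the experiment and customer IDs with SHA-256, so the same customer always lands in the same arm. It then applies a standard two-proportion z-test (normal approximation, pooled standard error) to conversion counts. The experiment IDs and counts are hypothetical. Tests on revenue per customer, rather than conversion, would need a different test.

```python
import hashlib
import math


def assign_variant(experiment_id: str, customer_id: str, treatment_share: float = 0.5) -> str:
    """Deterministic assignment: the same customer always lands in the same arm,
    so the split can be rebuilt later from the IDs alone."""
    digest = hashlib.sha256(f"{experiment_id}:{customer_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 2**32   # roughly uniform value in [0, 1)
    return "treatment" if bucket < treatment_share else "control"


def two_proportion_test(conv_control, n_control, conv_treat, n_treat):
    """Absolute uplift in conversion rate, z statistic and two-sided p-value."""
    p_c, p_t = conv_control / n_control, conv_treat / n_treat
    pooled = (conv_control + conv_treat) / (n_control + n_treat)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_control + 1 / n_treat))
    z = (p_t - p_c) / se
    # Standard normal CDF via erf: Phi(x) = 0.5 * (1 + erf(x / sqrt(2)))
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return p_t - p_c, z, p_value


print(assign_variant("price-test-q3", "cust-0042"))
uplift, z, p = two_proportion_test(100, 1000, 130, 1000)
print(f"uplift {uplift:+.1%}, z = {z:.2f}, p = {p:.3f}")
```

Because assignment depends only on the IDs, a buyer's analyst can regenerate both arms from raw customer lists and rerun the test without trusting a stored assignment table.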

Taken together—clear IP ownership and optional monetization routes, framework‑backed security evidence, and audited data that supports growth claims—these measures transform execution gains into a de‑risked story acquirers can buy into. With the controls in place, the final task is to turn operational improvements and evidence into a crisp, sellable narrative that buyers can validate quickly.

Exit-ready operations: turn execution into a sell-side narrative

Before-after bridge: prove run-rate EBITDA, market share, and retention gains

Build a concise before/after bridge that ties every claimed improvement to a verifiable driver. Start with a defensible run‑rate baseline (normalized for one‑offs and seasonality). Then show the incremental impact of each initiative with clear attribution: revenue uplift and cost reduction flow into EBITDA, while working‑capital release is cash and belongs on a separate line. For retention and market‑share claims, link cohort analyses and customer‑level evidence (renewal rates, churn cohorts, usage) so uplift is reproducible under diligence rather than anecdotal.

Keep the bridge transparent: show assumptions, sensitivity ranges and the minimum set of controls or behaviours required to sustain the gains post‑close. Buyers value repeatability — a bridge that maps to observable, auditable metrics is far more persuasive than one built on broad statements.
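
A bridge is easier to defend when its arithmetic is explicit. The sketch below uses hypothetical figures in $M. It normalizes reported EBITDA for one-offs to reach a run-rate baseline and stacks initiative deltas on top. Working-capital release stays on a separate cash line because it does not flow through EBITDA. Seasonality adjustments and sensitivity ranges are left out for brevity.

```python
def ebitda_bridge(reported_ebitda, one_offs, initiatives):
    """Build a run-rate EBITDA bridge plus a separate cash total.

    one_offs: {description: amount}; positive amounts add back non-recurring
              costs, negative amounts strip out non-recurring gains
    initiatives: list of (name, category, amount); category is one of
                 "revenue uplift", "cost reduction" or "working capital"
    Working-capital releases are one-off cash, not EBITDA, so they are kept apart.
    """
    rows = [("Reported EBITDA", reported_ebitda)]
    rows += [(f"Normalize: {desc}", amt) for desc, amt in one_offs.items()]
    baseline = reported_ebitda + sum(one_offs.values())
    rows.append(("Run-rate baseline", baseline))
    ebitda, cash = baseline, 0.0
    for name, category, amount in initiatives:
        if category == "working capital":
            cash += amount
        else:
            ebitda += amount
            rows.append((f"{category.capitalize()}: {name}", amount))
    rows.append(("Pro forma run-rate EBITDA", ebitda))
    return rows, ebitda, cash


# Hypothetical figures in $M.
rows, ebitda, cash = ebitda_bridge(
    reported_ebitda=10.0,
    one_offs={"Litigation settlement": 0.8, "Insurance recovery": -0.3},
    initiatives=[
        ("Price guardrails", "revenue uplift", 1.2),
        ("Invoice automation", "cost reduction", 0.5),
        ("Inventory turns 4x to 5x", "working capital", 3.0),
    ],
)
for label, value in rows:
    print(f"{label:<40}{value:>8.1f}")
print(f"{'Cash released (not in EBITDA)':<40}{cash:>8.1f}")
```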

Sell-side diligence pack: process capability, KPI dashboards, and security attestations

Prepare a compact, buyer‑facing diligence pack focused on what acquirers actually validate. Include (1) process maps and SOPs for critical functions, (2) a handful of KPI dashboards tied to the bridge (with data lineage notes), and (3) evidence of controls: contracts, third‑party attestations, security policies and incident logs where relevant. Make the pack navigable, with an executive summary, a folder index, and a one‑page evidence summary for each major claim.

Design the pack for rapid validation: prioritize primary evidence (system exports, signed contracts, audited reports) over narrative. That reduces follow‑up questions, shortens due diligence timelines and preserves leverage during negotiations.

Minimize TSAs and carve-out risk with standardized, automated processes

Reduce the need for lengthy transitional services agreements by standardizing and automating core interactions before exit. Identify dependencies (shared systems, key suppliers, finance close processes), then design clean handoffs: segregated environments, templated supplier amendments, and runbooks for month‑end and customer management activities. Where separation is costly, create short, well‑scoped TSAs with clear SLAs and exit triggers.

Practical tactics include extracting minimal data sets to run parallel validation, automating recurrent reconciliations to eliminate manual handover steps, and documenting knowledge transfer in bite‑sized playbooks. The result: lower buyer integration risk, fewer negotiations over post‑close support, and a smoother closing timeline.

When execution is converted into clear, auditable evidence and packaged in a buyer‑centric way, operational gains stop being internal wins and become tangible valuation drivers — the final step is turning that evidence into a sellable story that a buyer can validate quickly and confidently.