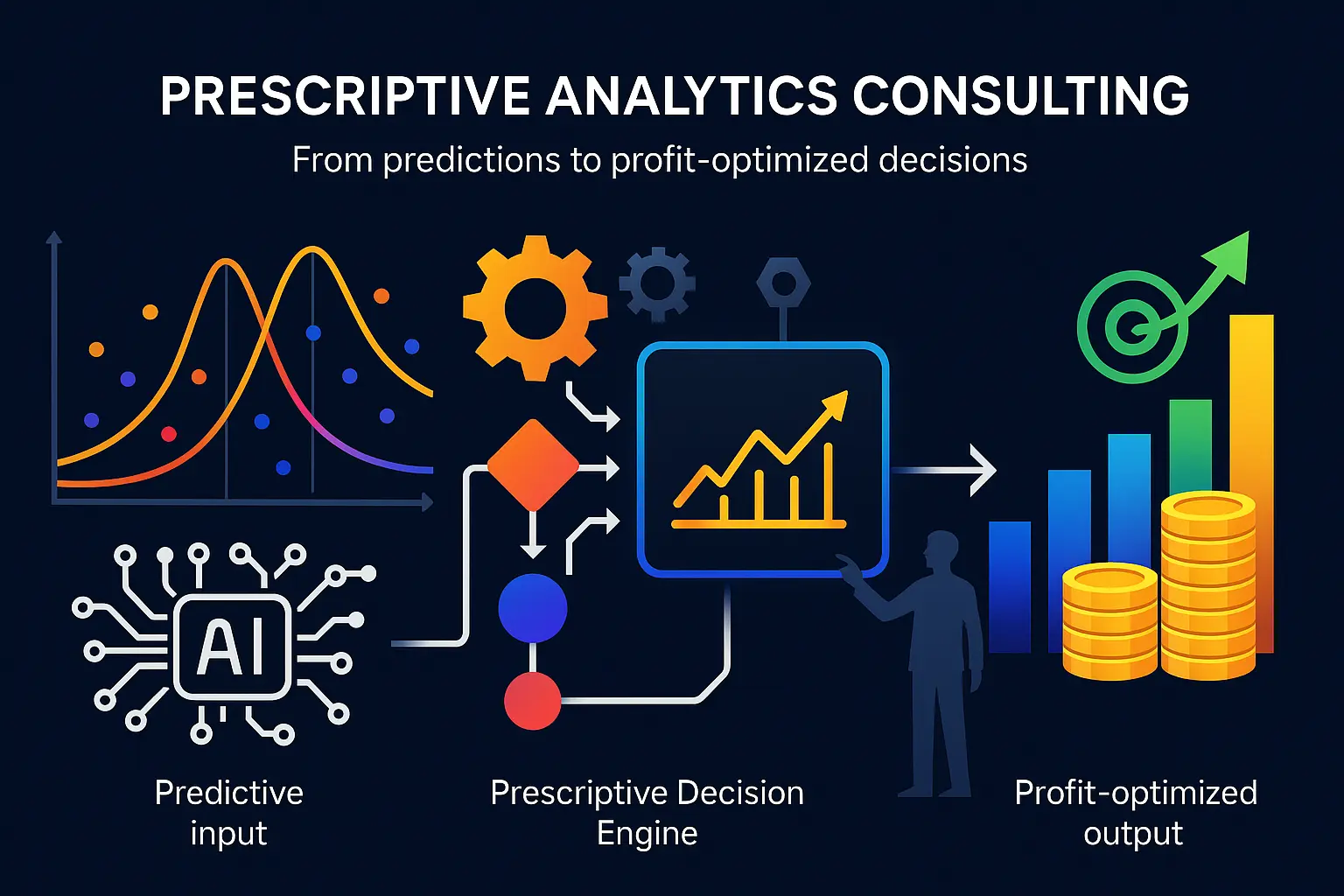

Most analytics stops at “what happened” or “what will probably happen.” Prescriptive analytics takes the next — and much harder — step: it says what to do. It turns forecasts into concrete, constrained decisions that balance revenue, cost, risk and customer impact so teams can act with confidence instead of guessing.

Think of prescriptive analytics as decision engineering. It combines forecasts with optimization, simulation and policy logic (and increasingly reinforcement learning) to recommend—or even automate—the best course of action given real‑world limits: budgets, inventory, legal rules and human approvals. The goal isn’t prettier dashboards; it’s profit‑optimized, auditable choices that leaders can trust.

Why now? Data is richer, models are faster, and business environments change in minutes instead of months. That makes black‑box predictions useful but incomplete. Organizations that connect those predictions to clear objective functions and governance capture measurable value: smarter pricing, smarter retention plays, fewer operational failures, and tighter security decisions that protect value and buyer confidence.

In this article you’ll get a practical primer: what prescriptive analytics really is, the core methods (optimization, simulation, causal tools and RL), the decision inputs you must capture, quick wins by function (pricing, retention, operations, risk), a 90‑day consulting playbook to earn executive trust, and the outcomes that move valuation—not just dashboards.

If you’re responsible for a high‑stakes decision — commercial strategy, supply chain resilience, or security posture — read on. This is about turning data and models into decisions that actually improve the bottom line and can be measured at exit.

What prescriptive analytics is—and why it matters now

Prescriptive analytics turns insight into action. Where descriptive analytics summarizes what happened and predictive analytics forecasts what will likely happen, prescriptive analytics recommends the specific choices that maximize business objectives given real-world limits. It’s the layer that closes the loop between data and decisions—so organizations don’t just know the future, they act on it optimally.

From descriptive and predictive to prescriptive: the leap to action

Descriptive tells you the story, predictive gives you a forecast, and prescriptive hands you the playbook. The leap to prescriptive is behavioral: it replaces manual judgment and one-size-fits-all rules with context-aware, measurable recommendations that account for competing goals (profit vs. service levels, speed vs. cost) and the fact that actions change outcomes. That makes prescriptive systems ideal for high-stakes, repeatable decisions where consistent, explainable trade-offs improve results over time.

Core methods: optimization, simulation, causal inference, reinforcement learning

Optimization is the workhorse: mathematical programs (linear, integer, nonlinear) translate objectives and constraints into a best-possible plan, such as price schedules, production schedules, or inventory policies that maximize margin or minimize cost.
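
To make the idea concrete, here is a minimal sketch of a tiny integer program solved by brute-force enumeration. The two products, their margins, machine-hours, and demand caps are hypothetical numbers chosen for illustration; a real deployment would more likely hand the same formulation to a dedicated solver.

```python
from itertools import product

# Hypothetical inputs: unit margin, machine-hours per unit, and demand caps.
MARGIN = {"A": 30, "B": 45}
HOURS = {"A": 2, "B": 4}
MAX_UNITS = {"A": 15, "B": 8}
CAPACITY_HOURS = 40  # hard constraint shared by both products


def best_plan():
    """Enumerate every feasible integer plan and keep the highest-margin one."""
    best_margin, best_qty = 0, {"A": 0, "B": 0}
    for qty_a, qty_b in product(range(MAX_UNITS["A"] + 1), range(MAX_UNITS["B"] + 1)):
        hours = qty_a * HOURS["A"] + qty_b * HOURS["B"]
        if hours > CAPACITY_HOURS:
            continue  # infeasible: violates the capacity constraint
        margin = qty_a * MARGIN["A"] + qty_b * MARGIN["B"]
        if margin > best_margin:
            best_margin, best_qty = margin, {"A": qty_a, "B": qty_b}
    return best_margin, best_qty


print(best_plan())  # (555, {'A': 14, 'B': 3})
```

Note that the intuitive rule "fill capacity with the product that earns the most per machine-hour" would build 15 units of A and 2 of B for a margin of 540, so even in this toy case the explicit search finds a better plan.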

Simulation lets teams model complex systems and stress-test candidate policies before committing—useful when outcomes are stochastic or when interventions have delayed effects.
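
As a rough illustration, the sketch below stress-tests two candidate stocking policies against the same simulated demand. The uniform demand distribution, the order-up-to levels, and the function name `simulate_policy` are all assumptions made for the example, not a recommended model.

```python
import random


def simulate_policy(order_up_to, days=1000, seed=42):
    """Replay one stocking policy against randomly drawn daily demand."""
    rng = random.Random(seed)  # same seed gives every policy the same demand path
    stockout_days = 0
    leftover_units = 0
    for _ in range(days):
        demand = rng.randint(0, 20)  # assumed: uniform demand between 0 and 20
        if demand > order_up_to:
            stockout_days += 1
        else:
            leftover_units += order_up_to - demand
    return {
        "stockout_rate": stockout_days / days,
        "avg_leftover": leftover_units / days,
    }


for level in (10, 16):
    print(level, simulate_policy(level))
```

Reusing the seed means both policies face identical demand, so any difference in stockouts or leftover stock comes from the policy rather than from sampling noise.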

Causal inference separates correlation from cause, ensuring prescriptive actions target levers that actually move the metric you care about (e.g., which retention tactics reduce churn versus merely correlate with it).
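
The sketch below shows why this matters, using made-up counts in which outreach was mostly sent to high-risk accounts. A naive comparison makes outreach look harmful, while a comparison within each risk segment suggests it helps. Stratifying on one observed variable is only one simple adjustment and assumes no other confounders; a randomized experiment is the stronger design where it is feasible.

```python
# Hypothetical observational data: (segment, got_outreach) -> (accounts, churned)
DATA = {
    ("high_risk", True): (80, 32),
    ("high_risk", False): (20, 10),
    ("low_risk", True): (20, 1),
    ("low_risk", False): (80, 8),
}


def churn_rate(rows):
    """Pooled churn rate over a list of (accounts, churned) pairs."""
    accounts = sum(n for n, _ in rows)
    churned = sum(c for _, c in rows)
    return churned / accounts


def naive_effect(data):
    """Treated churn minus untreated churn, ignoring who got treated."""
    treated = [v for (_, t), v in data.items() if t]
    control = [v for (_, t), v in data.items() if not t]
    return churn_rate(treated) - churn_rate(control)


def stratified_effect(data):
    """Average the within-segment effect, weighted by segment size."""
    segments = {seg for seg, _ in data}
    total = sum(n for n, _ in data.values())
    effect = 0.0
    for seg in segments:
        n_t, c_t = data[(seg, True)]
        n_c, c_c = data[(seg, False)]
        weight = (n_t + n_c) / total
        effect += weight * (c_t / n_t - c_c / n_c)
    return effect


print(round(naive_effect(DATA), 3))       # 0.15   (outreach looks harmful)
print(round(stratified_effect(DATA), 3))  # -0.075 (outreach reduces churn)
```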

Reinforcement learning (RL) learns policies from interaction data for problems where decisions and outcomes form long-running feedback loops—RL shines in dynamic personalization, real-time bidding, and sequential maintenance decisions.
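
Full RL is beyond a short example, but its core explore-and-exploit loop can be sketched with an epsilon-greedy multi-armed bandit, which is a simplified, stateless special case. The conversion rates, step count, and `run_bandit` name below are illustrative assumptions.

```python
import random


def run_bandit(true_rates, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy bandit: mostly pick the best-looking arm, sometimes explore."""
    rng = random.Random(seed)
    counts = [0] * len(true_rates)
    estimates = [0.0] * len(true_rates)
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(len(true_rates))  # explore a random offer
        else:
            arm = max(range(len(true_rates)), key=lambda i: estimates[i])  # exploit
        # Simulated feedback: 1.0 if the (hypothetical) customer converts.
        reward = 1.0 if rng.random() < true_rates[arm] else 0.0
        counts[arm] += 1
        # Incremental update of the running mean reward for this arm.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return counts, estimates


counts, estimates = run_bandit([0.05, 0.10, 0.20])
print(counts, [round(e, 3) for e in estimates])
```

In a live system the simulated reward would be replaced by observed outcomes, and the exploration rate becomes a business decision because every exploratory action has a real cost.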

Decision inputs you need: forecasts, constraints, costs, risks, and trade‑offs

Prescriptive models consume more than a point forecast. They need probabilistic forecasts or scenario trees to represent uncertainty, explicit constraints (capacity, budgets, regulations), and accurate cost or reward models for actions. Risk preferences and business rules turn a theoretical optimum into an operational one: a solution that’s legal, auditable, and aligned with stakeholders.
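
A small sketch of how these inputs combine: scenario probabilities stand in for the probabilistic forecast, a payoff table stands in for the reward model, and a floor on the worst-case payoff stands in for a risk preference. All names and numbers are hypothetical.

```python
# Hypothetical scenario probabilities (a stand-in for a probabilistic forecast).
SCENARIOS = {"low": 0.3, "base": 0.5, "high": 0.2}

# Hypothetical payoff of each action under each demand scenario.
PAYOFF = {
    "hold_price": {"low": 80, "base": 100, "high": 110},
    "raise_price": {"low": 20, "base": 120, "high": 180},
    "discount": {"low": 95, "base": 98, "high": 100},
}


def recommend(min_worst_case=None):
    """Pick the highest expected payoff among actions that respect the risk floor."""
    best_action, best_value = None, float("-inf")
    for action, payoff in PAYOFF.items():
        if min_worst_case is not None and min(payoff.values()) < min_worst_case:
            continue  # violates the risk constraint, so it is not a candidate
        expected = sum(SCENARIOS[s] * payoff[s] for s in SCENARIOS)
        if expected > best_value:
            best_action, best_value = action, expected
    return best_action


print(recommend())                   # raise_price
print(recommend(min_worst_case=50))  # discount
```

The unconstrained optimum and the risk-constrained recommendation differ here, which is exactly the gap between a theoretical optimum and an operational one.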

Good deployment design also codifies guardrails—approval gates, human-in-the-loop overrides, and rollback paths—so decision recommendations become trusted tools rather than black-box edicts.

Data, privacy, and IP protection baked in (ISO 27002, SOC 2, NIST CSF 2.0)

Security and IP stewardship aren’t an afterthought for prescriptive systems; they’re foundational. Reliable decisioning depends on trustworthy data flows, clear provenance, and controls that prevent leakage of models or strategic data. Integrating strong information-security frameworks into both development and deployment derisks automation and increases buyer and stakeholder confidence.

“IP & Data Protection: ISO 27002, SOC 2 and NIST frameworks defend against value‑eroding breaches — the average cost of a data breach in 2023 was $4.24M, and GDPR fines can reach up to 4% of annual revenue — so compliance readiness materially derisks investments and boosts buyer trust.” Portfolio Company Exit Preparation Technologies to Enhance Valuation — D-LAB research

With the methods, inputs, and controls in place, teams can move from experimentation to measurable, repeatable decisioning—next we’ll map the specific business areas where prescriptive analytics tends to deliver the fastest, highest-value wins.

Where prescriptive analytics pays off fastest

Prescriptive analytics delivers outsized returns where decisions are frequent, measurable, and directly tied to financial or operational objectives. The highest-impact areas share three traits: clear objective functions (revenue, cost, uptime), available data and systems to act on recommendations (CRM, pricing engines, MES/ERP), and a governance model that lets models influence outcomes quickly and safely. Below are the domains that typically produce the fastest, most defensible value.

Revenue engines: dynamic pricing, bundling, deal configuration, next‑best‑offer

Revenue processes are prime candidates because they generate immediate, measurable financial outcomes every time a decision is applied. Prescriptive analytics optimizes price points, recommends product bundles, and configures deals by balancing margin, conversion probability, and inventory or capacity constraints.

Operationalizing these recommendations—embedding them into the checkout flow, sales desk, or CPQ system—turns model outputs into recurring uplifts rather than one-off insights. The short feedback loop between action and revenue enables rapid experimentation and continuous improvement.
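
For illustration only, the following sketch picks a price from a grid by balancing margin against an inventory limit. The linear demand curve, unit cost, and price grid are invented for the example; in practice the demand response would come from a fitted model.

```python
COST = 40  # hypothetical unit cost


def demand(price):
    """Assumed linear demand curve; a real system would use a fitted model."""
    return max(0, 1000 - 8 * price)


def best_price(inventory, candidates=range(50, 125, 5)):
    """Return the (price, profit) pair with the highest profit on the grid."""
    best = None
    for price in candidates:
        units = min(demand(price), inventory)  # cannot sell more than is in stock
        profit = (price - COST) * units
        if best is None or profit > best[1]:
            best = (price, profit)
    return best


print(best_price(inventory=300))  # (90, 14000)
```

With only 300 units available, prices below 87.5 would sell out anyway, so the recommendation shifts upward relative to what demand alone would suggest.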

Retention: next‑best‑action, CS playbooks, sentiment‑driven outreach

Retention problems are often high-leverage: small improvements in customer churn or expansion can compound dramatically over time. Prescriptive systems prioritize accounts, prescribe tailored outreach scripts or offers, and recommend escalation paths based on predicted lifetime value, usage signals, and sentiment.

Because interventions (emails, offers, agent scripts) can be A/B tested and instrumented, prescriptive initiatives here produce clear causal evidence of impact, which accelerates executive buy-in and scaling across segments.
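
One simple way to sketch account prioritization is to rank accounts by the expected net value of an outreach and fill the available customer-success capacity from the top. The accounts, the flat 25% save rate, and the outreach cost below are hypothetical; a real save rate would come from controlled tests like those described above.

```python
# Hypothetical accounts: (name, churn probability, lifetime value)
ACCOUNTS = [
    ("acme", 0.6, 50_000),
    ("globex", 0.1, 200_000),
    ("initech", 0.8, 2_000),
    ("umbrella", 0.3, 40_000),
]
SAVE_RATE = 0.25     # assumed share of churning accounts an outreach retains
OUTREACH_COST = 500  # assumed cost per outreach


def expected_net_value(churn_prob, ltv):
    """Expected revenue saved by an outreach, minus what the outreach costs."""
    return churn_prob * SAVE_RATE * ltv - OUTREACH_COST


def prioritize(accounts, capacity):
    """Rank accounts by expected net value; keep positive ones up to capacity."""
    scored = [(expected_net_value(p, ltv), name) for name, p, ltv in accounts]
    worthwhile = sorted((s for s in scored if s[0] > 0), reverse=True)
    return [name for _, name in worthwhile[:capacity]]


print(prioritize(ACCOUNTS, capacity=2))  # ['acme', 'globex']
```

The account with the highest churn probability (initech) is not selected, because its lifetime value does not cover the cost of the intervention.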

Operations: factory scheduling, inventory optimization, prescriptive maintenance

Operational domains—plant scheduling, inventory replenishment, and maintenance—are where constraints matter most. Prescriptive analytics formalizes those constraints and trade‑offs into optimization problems so planners get schedules and reorder decisions that maximize throughput, reduce shortage risk, and minimize cost.

These systems often integrate with existing ERP/MES and IoT feeds, allowing automated decision execution or tightly supervised human-in-the-loop workflows. The result: tangible reductions in downtime, stockouts, and expedited freight spend as recommendations convert directly into physical outcomes.

Risk & cybersecurity: policy tuning, incident response decisioning, access controls

Risk and security teams benefit from prescriptive approaches because the cost of false positives and false negatives is explicit. Analytics can recommend policy thresholds, prioritize incident responses, and automate access decisions to minimize exposure while preserving business flow.

Prescriptive rules paired with scoring let teams balance risk appetite against operational tolerance, and because incidents generate logged outcomes, teams can rapidly measure whether policy changes reduce time-to-detect, time-to-contain, or costly escalations.
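
The trade-off between false positives and false negatives can be sketched as a threshold search over logged, labeled events. The scores, labels, candidate thresholds, and cost figures here are invented for the example.

```python
# Hypothetical logged events: (risk score, was it actually malicious?)
EVENTS = [
    (0.95, True), (0.90, True), (0.80, False), (0.70, True), (0.60, False),
    (0.40, False), (0.30, True), (0.20, False), (0.10, False),
]


def policy_cost(threshold, cost_fp, cost_fn):
    """Total cost of flagging every event whose score is at or above the threshold."""
    false_pos = sum(1 for score, bad in EVENTS if score >= threshold and not bad)
    false_neg = sum(1 for score, bad in EVENTS if score < threshold and bad)
    return false_pos * cost_fp + false_neg * cost_fn


def best_threshold(cost_fp, cost_fn, candidates=(0.25, 0.5, 0.75, 0.85)):
    """Pick the candidate threshold with the lowest total cost."""
    return min(candidates, key=lambda t: policy_cost(t, cost_fp, cost_fn))


print(best_threshold(cost_fp=50, cost_fn=5000))  # 0.25
print(best_threshold(cost_fp=50, cost_fn=40))    # 0.85
```

When a missed incident is far more expensive than an analyst's time, the cheapest policy flags aggressively; when the costs are comparable, the threshold rises.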

In all these areas the fastest wins come from pairing a focused decision objective with a reproducible execution path: clear metrics, integrated systems that can apply recommendations, and experiments that prove causality. That combination makes it practical to design a short, high‑confidence rollout that demonstrates value to executives and users alike—and primes the organization for systematic scale.

A 90‑day prescriptive analytics consulting plan that earns executive trust

This 90‑day plan is built to deliver measurable wins fast while establishing the governance, transparency, and operational plumbing executives need to sign off on scale. The sequence focuses on: (1) mapping the decision and its constraints; (2) delivering a working predictive + decisioning prototype; (3) deploying with human oversight and auditable controls; (4) proving value through controlled experiments; and (5) preparing production-scale MLOps and optimization embedding. Each phase is time‑boxed, outcome‑driven, and tied to clear KPIs so leadership can see risk and reward in real time.

Map high‑stakes decisions and constraints; define the objective function

Week 0–2: convene a short steering committee (CRO/COO/Head of Data + 2–3 stakeholders) and run decision‑mapping workshops. Identify the one or two high‑frequency, high‑value decisions to optimize, capture the objective function (e.g., margin vs conversion, uptime vs cost), and list hard constraints (capacity, regulation, SLAs).

Deliverables: a one‑page decision spec (objective, constraints, KPIs), a prioritized backlog of supporting data sources, and an explicit acceptance criterion executives can sign off on (target KPI uplift and acceptable downside scenarios).

Build the predictive layer and connect it to decision logic (rules + optimization)

Week 3–6: create lightweight, reproducible predictive models and a minimal decision engine. Parallelize work: data engineers build a curated feature set and connectors while data scientists prototype probabilistic forecasts. Decision scientists translate the objective function into rules and/or an optimization formulation and produce candidate policies.

Deliverables: baseline model metrics, an API/endpoint that returns predictions and recommended actions, and a test harness that simulates decisions under sampled scenarios so stakeholders can compare candidate policies.

Governed deployment: human‑in‑the‑loop, approvals, audit trails, rollback

Week 7–9: design the governance layer before wide rollout. Implement human‑in‑the‑loop gates, approval matrices, and explainability notes for each recommended action. Add audit trails, versioned model artifacts, and a clear rollback plan to revert to safe defaults if KPIs degrade.

Deliverables: a staged deployment plan (sandbox → pilot → controlled release), role‑based access controls, an incident response / rollback runbook, and a short training session for operators and approvers that demonstrates how to read recommendations and exceptions.
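
A minimal sketch of what an approval gate with an audit trail might look like, using discount recommendations as the example. The thresholds, field names, and the `route` function are hypothetical; real approval matrices would be defined with the steering committee.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

AUTO_APPLY_MAX_DISCOUNT = 0.05  # assumed: small discounts need no approval
HARD_LIMIT_DISCOUNT = 0.20      # assumed: anything above this is never applied


@dataclass
class AuditTrail:
    entries: list = field(default_factory=list)

    def record(self, rec_id, discount, model_version, decision):
        """Append one immutable-style log entry per routed recommendation."""
        self.entries.append({
            "rec_id": rec_id,
            "discount": discount,
            "model_version": model_version,
            "decision": decision,
            "at": datetime.now(timezone.utc).isoformat(),
        })


def route(rec_id, discount, model_version, trail):
    """Decide whether a recommendation is applied, escalated, or rejected."""
    if discount > HARD_LIMIT_DISCOUNT:
        decision = "rejected"
    elif discount > AUTO_APPLY_MAX_DISCOUNT:
        decision = "needs_approval"  # human-in-the-loop gate
    else:
        decision = "auto_applied"
    trail.record(rec_id, discount, model_version, decision)
    return decision


trail = AuditTrail()
print(route("rec-1", 0.03, "v1", trail))  # auto_applied
print(route("rec-2", 0.12, "v1", trail))  # needs_approval
print(route("rec-3", 0.30, "v1", trail))  # rejected
```

Logging the model version with every decision is what makes a later rollback or post-incident review tractable.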

Prove value fast: sandboxes, digital twins, champion/challenger tests

Week 10–12: run tightly scoped pilots that isolate causal impact. Use sandboxes or digital‑twin simulations where actions can be applied without business disruption, and run champion/challenger or A/B experiments where feasible. Measure against the acceptance criteria set in Week 0–2 and prioritize metrics that matter to the steering committee (revenue, cost savings, churn reduction, uptime).

Deliverables: experiment results with statistical confidence, a concise executive one‑pager showing realized vs. expected impact, and documented learnings that reduce model and operational risk.
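
As one way to attach statistical confidence to a champion/challenger result, the sketch below runs a standard two-proportion z-test using only the Python standard library. The conversion counts are hypothetical, and this test is a common choice rather than the only valid one.

```python
import math


def two_proportion_z(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Standard normal CDF via the error function.
    cdf = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    p_value = 2 * (1 - cdf)
    return z, p_value


# Hypothetical pilot: champion converts 200/2000, challenger 260/2000.
z, p_value = two_proportion_z(200, 2000, 260, 2000)
print(round(z, 2), round(p_value, 4))
```

A small p-value supports the claim that the challenger policy, not chance, produced the lift, provided the two groups were assigned at random.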

Scale with MLOps + optimization engines embedded into workflows

Post‑pilot (day 90+): operationalize the stack for repeatability and scale. Hand over production pipelines with CI/CD, monitoring, alerting, drift detection, and automated retraining triggers. Embed the optimization engine into existing workflows (CRM, CPQ, MES) so recommendations execute with minimal friction, and set up quarterly review cadences to refresh objective weights and constraints as business priorities evolve.

Deliverables: production MLOps playbook, monitoring dashboards with business KPIs and model health metrics, SLAs for model performance, and a rollout roadmap for additional decision domains.
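
Drift detection can take many forms. One widely used and simple check is the Population Stability Index (PSI), sketched below on hypothetical binned score distributions. The 0.2 alert level is a common rule of thumb, not a standard, and should be tuned to the use case.

```python
import math


def psi(expected, actual, eps=1e-6):
    """Population Stability Index between two binned distributions (proportions)."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) on empty bins
        total += (a - e) * math.log(a / e)
    return total


# Hypothetical share of scored accounts per bin at training time vs. this week.
baseline = [0.25, 0.25, 0.25, 0.25]
this_week = [0.10, 0.20, 0.30, 0.40]

score = psi(baseline, this_week)
print(round(score, 3))
if score > 0.2:  # assumed alert level (rule of thumb)
    print("drift alert: review the model or trigger retraining")
```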

Because every step is tied to signed acceptance criteria, clear rollback paths, and measurable pilots, executives can watch value materialize while controls keep downside bounded — giving the team the credibility to move from a single pilot to enterprise‑wide decision automation and to quantify the financial outcomes leadership expects next.

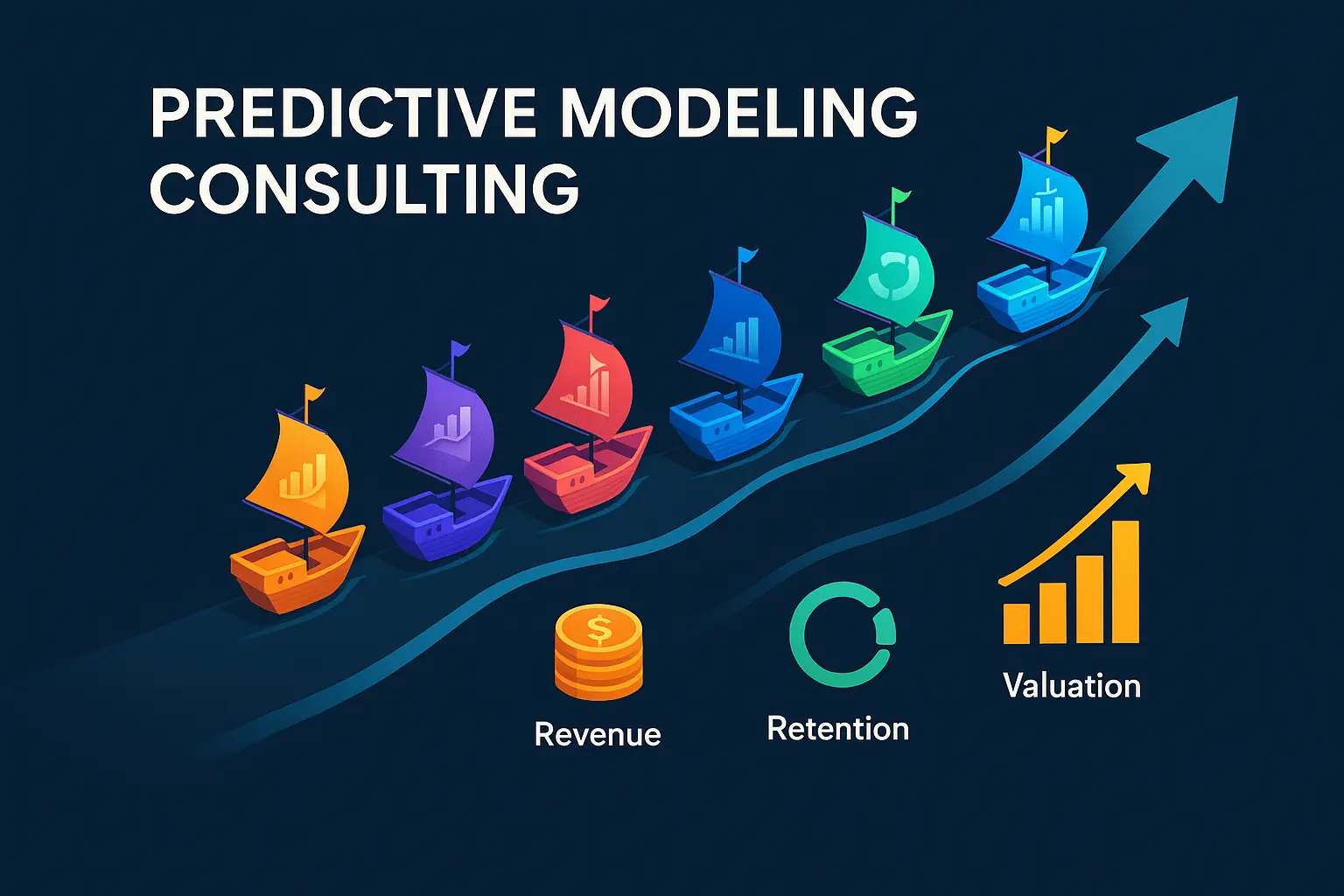

Outcomes that move valuation—not just dashboards

Prescriptive analytics succeeds when it translates models into measurable, repeatable financial outcomes that investors and acquirers care about—higher revenue, wider margins, lower churn, more predictable capital efficiency, and reduced operational risk. Below are the outcome categories that consistently shift valuation levers, with practical notes on how prescriptive decisioning delivers each result.

Revenue lift: +10–25% from dynamic pricing and recommendations

Embedding optimization into pricing engines, recommendation services, and deal configuration (CPQ) converts insights directly into higher order value and better margin capture. Prescriptive pricing adjusts to demand, competitor moves, and customer willingness to pay while bundling and next‑best‑offer logic increase average deal size and conversion—delivering recurring uplifts rather than one‑time analytics wins.

Retention: −30% churn, +10% NRR via prescriptive CS and call‑center assistants

Small changes in churn compound into large valuation effects. Prescriptive systems prioritize at‑risk accounts, recommend personalized interventions (discounts, feature nudges, success playbooks), and guide agents with context‑aware scripts and offers. When actions are instrumented and A/B tested, teams can prove causal lift in renewal and expansion metrics that directly improve recurring revenue multiples.
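
The compounding effect is easy to check with a few lines of arithmetic. The starting churn rate of 3% per month is an assumed figure; the 2.1% comparison simply applies a 30% relative reduction to it.

```python
def retained_share(monthly_churn, months):
    """Share of an initial customer cohort still active after the given months."""
    return (1 - monthly_churn) ** months


# Assumed baseline of 3% monthly churn vs. a 30% relative reduction (2.1%).
for churn in (0.03, 0.021):
    print(f"{churn:.1%} monthly churn -> {retained_share(churn, 36):.1%} retained after 3 years")
```

Under these assumptions roughly a third of the cohort survives three years at the baseline rate, versus close to half after the reduction, which is why modest churn improvements show up so strongly in recurring revenue.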

Manufacturing: −50% unplanned downtime, −40% defects, +30% output

Operations benefit from decisioning that respects hard constraints (capacity, lead times) while optimizing for throughput and cost. Prescriptive maintenance schedules, constrained production planning, and inventory optimization reduce emergency spend and scrap while increasing usable output—effects that strengthen margins, capital efficiency, and acquirer confidence in repeatable operations.

Workflow ROI: 112–457% over 3 years; 40–50% task automation

“AI co‑pilots and workflow automation deliver outsized returns — Forrester estimates 112–457% ROI over 3 years; automation can cut manual tasks by 40–50% and scale data processing by ~300x, driving rapid operational leverage.” Portfolio Company Exit Preparation Technologies to Enhance Valuation — D-LAB research

Beyond raw productivity, prescriptive co‑pilots and agents standardize decision quality and compress time to execution—turning variable human performance into consistent, auditable outcomes that scale. Those gains feed both cost reduction and faster product/feature iterations.

Cyber resilience: lower breach risk boosts buyer trust and valuation multiples

Reducing security risk is a valuation lever often overlooked by analytics teams. Prescriptive decisioning can tune access policies, prioritize patching and incident responses, and recommend containment actions that minimize expected loss. Demonstrable improvements in cyber posture and compliance reduce transaction risk and support higher exit multiples.

Across these categories the common thread is measurable causality: prescriptive projects that pair clear business metrics, controlled experiments, and executable integrations produce the evidence buyers and boards want to see. That evidence then guides selection criteria—both for the technical stack and for the partner who will help embed decisioning into the business—so you can confidently move from pilot wins to enterprise value creation.

Choosing a prescriptive analytics consulting partner

Picking the right partner is less about tech buzzwords and more about three things: decision science competence, repeatable playbooks that match your use cases, and the security & integration discipline to make recommendations operational and auditable. Below are practical selection criteria, questions to ask, and red flags to watch for when you evaluate firms.

Decision‑science first: clear objectives, constraints, and explainable trade‑offs

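Look for teams that start by modeling the decision, not by building models for models’ sake. A strong partner will pin down clear objectives, make constraints explicit, and explain the trade‑offs behind each recommendation.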

Questions to ask: How do you represent objective trade‑offs? Can you show an example of an explainable recommendation delivered to an operator?

Proven playbooks in pricing, retention, and operations (not just models)

Prefer partners who bring repeatable playbooks and outcome evidence for your domain. Proof points should include case studies that describe the decision being automated, the experimental design (A/B or champion/challenger), and the realized business impact tied to clear KPIs.

Security posture: industry‑grade security, audits, and clear data handling

Security and IP protection must be baked into solution design. The partner should be able to explain: how customer data will be ingested and stored, who sees model artifacts, and what third‑party attestations or audit reports they can provide. Verify data residency, encryption, access controls, and incident response responsibilities before production work begins.

Red flags: reluctance to put data‑handling rules into the contract, vague answers about audits, or one‑off manual data processes that expose sensitive information.

Stack fit: ERP/MES/CRM integration, MLOps, and change management

Successful prescriptive systems need operational integration. Confirm the partner’s experience with your stack and their plan for production readiness, covering ERP/MES/CRM integration, MLOps, and change management.

Contracting for outcomes: KPIs, A/B guardrails, SLAs, and rollback plans

Structure agreements around measurable milestones and safety gates. Good contracts include agreed KPIs, A/B guardrails, SLAs, and rollback plans.

Negotiate a payment schedule that balances vendor incentives with your risk—e.g., a fixed pilot fee, followed by outcome‑linked payments for scaled delivery.

Putting these criteria together will help you choose a partner who can both deliver early wins and embed prescriptive decisioning safely into your operations. With the right partner in place, the natural next step is a short, outcome‑focused program that proves value quickly and creates the operational foundation to scale decision automation across the business.