Private equity deals don’t win on spreadsheets alone. They win when a clear, time‑bound plan turns potential into repeatable cash and a sharpened exit story. This playbook is written for deal teams and operators who need a practical, 12‑month roadmap to accelerate value creation — starting in the first 100 days and building to a sale‑ready business.

In the next few minutes you’ll get a straight, no‑fluff view of what matters most: the pre‑deal checks that actually move multiples (IP, cyber, and real demand signals), a focused 90–100 day program that lifts retention, revenue, and margins, and the operations levers most funds underinvest in (manufacturing, supply chain, and automation). We’ll also show how to bake exit‑readiness into every initiative so the value you create is verifiable and saleable.

This introduction should help you decide three things: where to spend limited management time and capital in months 0–3, how to sequence work through months 4–12, and which metrics to track every week to prove progress. The north stars are concrete targets such as NRR, LTV/CAC, CAC payback, EBITDA margin and cash conversion, not vanity KPIs.

Read on if you want a playbook that prioritizes the four things buyers care about: defensible growth, predictable margins, operational resilience, and clean governance. No buzzwords, just step‑by‑step moves you can start on Day 1.

What private equity management consulting should achieve in 100 days and 12 months

PE vs. generalist consulting: outcomes, speed, and owner mindset

Private equity-focused consulting must be unapologetically outcome-driven: recommendations are judged by their ability to move an investment thesis, improve cash generation, or materially de‑risk exit timing. That contrasts with many generalist projects that prioritize analysis or capability design over immediate economic impact.

Speed matters. In the first 100 days the goal is to convert due‑diligence hypotheses into executable, measurable interventions that deliver visible P&L, working‑capital or retention improvements. Deliverables must be crisp: owner-ready decisions, prioritized roadmaps, and clear owners rather than long lists of possibilities.

The “owner mindset” is a distinguishing trait. Consultants working with PE teams must think like operators and acquirers — quantify what buyers will pay for, assign accountabilities, build tight governance, and bias toward actions that preserve optionality for an exit. Practicality wins over perfection: implementable pilots, rapid data checks, and decisions that can be scaled by the company team.

Lifecycle map: sourcing, diligence, 100‑day plan, scale‑up, exit

Consulting support should follow the investment lifecycle, aligning effort and scope to the stage of the deal. During sourcing and early diligence the focus is on read‑outs that change price or go/no‑go decisions (market signals, defensibility checks, and quick risk triage).

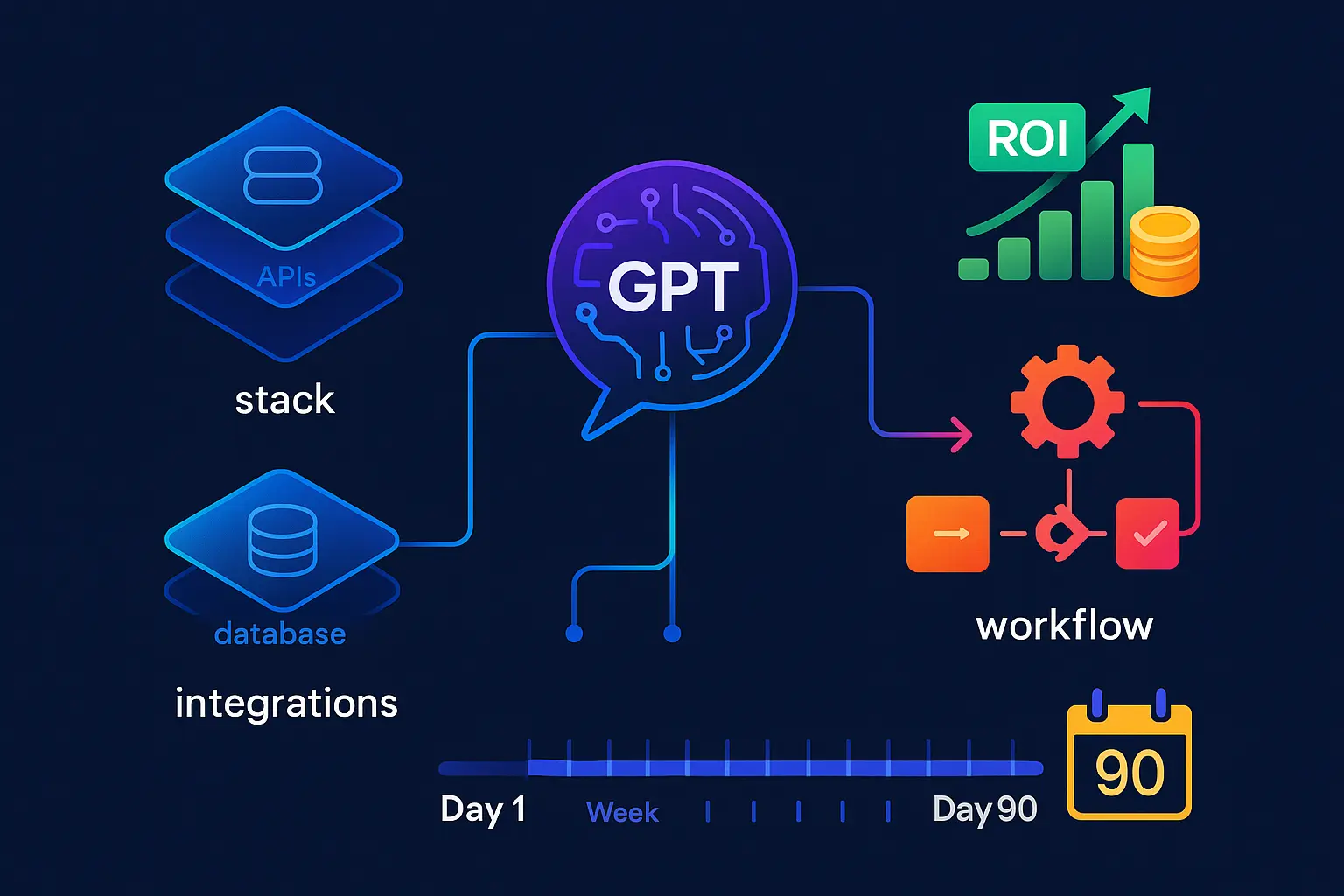

At close and through the first 100 days, consulting converts diligence findings into a prioritized 100‑day plan: immediate containment actions, revenue retention plays, working‑capital fixes, and staffing or vendor moves that unblock value. This plan must include tight KPIs, responsible leaders, and a timeline for proof points.

From months 4–12 the emphasis shifts to scale‑up: embed repeatable GTM motions, lift margins through operational levers, formalize governance and reporting, and build the “exit story”, a package of metrics, evidence and narrative that buyers understand. Consulting should hand over a company that can operate the growth and cost levers without day‑to‑day external direction.

Targets that matter: NRR, CAC payback, EBITDA margin, cash conversion, cyber posture

Choose a compact KPI stack that maps directly to valuation: revenue retention and expansion, capital efficiency of growth, margin improvement, working‑capital conversion and risk posture. These are the metrics a buyer will read first, so they must be accurate, auditable, and trending in the right direction.

In the first 100 days aim to baseline and stabilize: establish clean definitions, reconcile data sources, close critical reporting gaps, and deliver rapid wins that improve trajectory (for example, reduce churn risk, accelerate key receivables, or remediate the highest‑impact security gaps). Early wins demonstrate momentum and shrink near‑term exit risks.

Across 12 months, the objective is measurable improvement and institutionalization: lift net revenue retention through targeted retention and expansion programs; shorten CAC payback by making sales and marketing more efficient and by improving average deal value; expand EBITDA margin through pricing, SG&A automation and operational productivity; and improve cash conversion by optimizing payables, receivables and inventory flows. For cyber posture, move from patching to a documented, auditable control baseline so that buyers see sustained risk reduction.
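Clean definitions are easier to agree when the formulas are written down. The sketch below uses common textbook definitions of NRR, CAC payback and the cash conversion cycle. The function names and sample figures are illustrative assumptions, not data from any portfolio company.

```python
def net_revenue_retention(start_rr, expansion, contraction, churned):
    """NRR for a fixed customer cohort over a period (1.0 == 100%)."""
    if start_rr <= 0:
        raise ValueError("starting recurring revenue must be positive")
    return (start_rr + expansion - contraction - churned) / start_rr


def cac_payback_months(cac, monthly_revenue_per_customer, gross_margin):
    """Months of gross profit needed to recover the cost of acquiring one customer."""
    monthly_gross_profit = monthly_revenue_per_customer * gross_margin
    if monthly_gross_profit <= 0:
        raise ValueError("monthly gross profit must be positive")
    return cac / monthly_gross_profit


def cash_conversion_cycle(dso, dio, dpo):
    """Days of cash tied up: receivable days + inventory days - payable days."""
    return dso + dio - dpo


# Hypothetical figures, for illustration only.
nrr = net_revenue_retention(start_rr=1_000_000, expansion=150_000,
                            contraction=40_000, churned=60_000)
payback = cac_payback_months(cac=6_000, monthly_revenue_per_customer=500,
                             gross_margin=0.8)
ccc = cash_conversion_cycle(dso=45, dio=60, dpo=30)
print(f"NRR {nrr:.0%}, CAC payback {payback:.0f} months, CCC {ccc} days")
```

Whatever definitions a fund adopts, the point is to fix them once so that weekly numbers stay comparable.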

Whatever the numeric targets, make them actionable: link each KPI to one owner, two levers, and a dashboard with a weekly or monthly cadence. That way progress is visible, interventions can be reweighted quickly, and the fund can demonstrate the causal connection between its actions and value uplift.

With these short‑ and medium‑term goals established, the next step is to translate diligence signals into the specific pre‑deal checks and technical fixes that preserve—or create—multiple expansion at exit. That is where a focused diagnostic on defensibility and demand signals becomes essential.

Pre‑deal diligence that moves the multiple: IP, cybersecurity, and demand signals

Validate defensibility: IP audits, licensing options, and moat scoring

“Intellectual Property (IP) represents the innovative edge that differentiates a company from its competitors and is one of the biggest factors contributing to a company’s valuation. IP can be licensed, franchised, or sold separately, providing additional revenue streams that enhance enterprise value — strong IP investments often lead to higher valuation multiples.” Deal Preparation Technologies to Enhance Valuation of New Portfolio Companies — D-LAB research

What to do: run a focused IP audit (registrations, ownership chain, trade secrets, third‑party risks), score the moat (technical uniqueness, switching costs, reproducibility), and size monetization options (licensing, OEM, SaaS add‑ons). Document gaps that reduce multiple — unclear ownership, thin documentation, or bespoke integrations that block scaling — and convert each gap into a priced remediation or warranty in the data room.
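One simple way to make moat scoring repeatable is a weighted average across the three dimensions named above. This is a minimal sketch: the weights, the 1 to 5 scale and the dimension names are assumptions that a deal team would calibrate to its own thesis.

```python
# Hypothetical weights; higher scores always mean "better for the moat",
# so reproducibility is scored as "hard to reproduce".
MOAT_WEIGHTS = {
    "technical_uniqueness": 0.40,
    "switching_costs": 0.35,
    "hard_to_reproduce": 0.25,
}


def moat_score(scores, weights=MOAT_WEIGHTS):
    """Weighted average of 1-5 dimension scores; higher means a stronger moat."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    for name in weights:
        if not 1 <= scores[name] <= 5:
            raise ValueError(f"{name} must be scored between 1 and 5")
    total_weight = sum(weights.values())
    return sum(weights[name] * scores[name] for name in weights) / total_weight


# Illustrative target company.
target = {"technical_uniqueness": 4, "switching_costs": 3, "hard_to_reproduce": 2}
print(round(moat_score(target), 2))
```

The score matters less than the discussion it forces: each low dimension should map to a documented gap and a priced remediation.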

Prove resilience: ISO 27002, SOC 2, NIST CSF 2.0 readiness as price drivers

“Cybersecurity readiness materially de‑risks deals: the average cost of a data breach in 2023 was $4.24M, GDPR fines can reach up to 4% of annual revenue, and NIST compliance has delivered commercial wins (e.g., By Light won a $59.4M DoD contract despite a competitor being $3M cheaper) — all signalling how frameworks can drive price and buyer trust.” Fundraising Preparation Technologies to Enhance Pre-Deal Valuation — D-LAB research

What to do: rather than pursuing full certification up front, run a pragmatic security maturity assessment mapped to investor concerns: inventory crown‑jewel data, identify quick wins (patching, logging, MFA), and draft an evidence plan (a roadmap to SOC 2 or NIST artefacts). Quantify residual risk and estimated remediation cost so the fund can negotiate price, require escrow or an indemnity, or set a pre‑close remediation program that demonstrates momentum.
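Residual risk can be put into financial terms with a simple expected-loss view: likelihood times impact, before and after remediation. The sketch below ranks gaps by risk reduction per unit of remediation cost. The class, field names and figures are hypothetical, and the likelihood estimates would come from the assessment itself.

```python
from dataclasses import dataclass


@dataclass
class SecurityGap:
    name: str
    likelihood_now: float     # estimated annual probability of a loss event today
    likelihood_after: float   # estimated probability once the gap is remediated
    impact: float             # estimated loss if the event occurs
    remediation_cost: float   # must be greater than zero

    def expected_loss(self) -> float:
        return self.likelihood_now * self.impact

    def risk_reduction(self) -> float:
        return (self.likelihood_now - self.likelihood_after) * self.impact

    def reduction_per_unit_cost(self) -> float:
        return self.risk_reduction() / self.remediation_cost


def rank_gaps(gaps):
    """Order gaps so the best risk reduction per unit of spend comes first."""
    return sorted(gaps, key=lambda g: g.reduction_per_unit_cost(), reverse=True)


# Illustrative inputs only.
gaps = [
    SecurityGap("centralised logging", 0.10, 0.05, 500_000, 50_000),
    SecurityGap("MFA on admin accounts", 0.20, 0.05, 1_000_000, 20_000),
]
for gap in rank_gaps(gaps):
    print(gap.name, round(gap.risk_reduction()), round(gap.reduction_per_unit_cost(), 2))
```

The total of the remaining expected losses is one candidate input for a price adjustment or escrow discussion.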

Read the market: AI customer sentiment and buyer‑intent data to size upside

Pre‑deal value is often as much about upside as downside. Use customer sentiment analytics and buyer‑intent signals to convert soft claims into measurable opportunity: estimate addressable demand, likely conversion lift, and required go‑to‑market investment. Sentiment analytics surface product gaps and feature priorities that accelerate retention and price realization; buyer‑intent platforms reveal latent demand and shorten sales cycles when deployed post‑close.

Practical outputs for a deal team: a conservative upside model (volume × conversion lift × ARPA uplift) built from intent‑signal cohorts; prioritized quick experiments (targeted outreach, pricing tests, recommendation engine pilots) with expected lift and CAC impact; and a one‑page go‑to‑market playbook showing how to capture the identified upside within 90 days.
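The upside model (volume × conversion lift × ARPA uplift) can be sketched in a few lines. The function below is one possible reading of that formula: it compares revenue from an intent cohort with and without the lifts, then applies a haircut to stay conservative. The parameter names, the haircut and all figures are assumptions.

```python
def conservative_upside(accounts, base_conversion, base_arpa,
                        conversion_lift, arpa_uplift, haircut=0.5):
    """Incremental revenue from an intent cohort, discounted by a haircut.

    conversion_lift and arpa_uplift are relative lifts (0.2 == +20%).
    haircut is the share of the modelled upside that is discounted away.
    """
    if not 0 <= haircut <= 1:
        raise ValueError("haircut must be between 0 and 1")
    baseline = accounts * base_conversion * base_arpa
    uplifted = (accounts
                * base_conversion * (1 + conversion_lift)
                * base_arpa * (1 + arpa_uplift))
    return (uplifted - baseline) * (1 - haircut)


# Hypothetical cohort: 1,000 in-market accounts, 5% baseline conversion.
upside = conservative_upside(accounts=1_000, base_conversion=0.05, base_arpa=10_000,
                             conversion_lift=0.20, arpa_uplift=0.10)
print(round(upside))
```

Running the same function per cohort makes it easy to see which segments justify the go-to-market investment.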

Kill‑switches to price in: tech debt, data gaps, privacy & compliance risks

Every diligence should surface existential “kill‑switches” that materially erode value or block buyers: missing data lineage, large unaddressed legal claims, critical single‑vendor dependencies, or architecture that prevents analytics and automation. Convert each into one of three investor actions: (1) price the risk, (2) require remediation pre‑close, or (3) mandate an immediate post‑close sprint with clear milestones and escrowed funds.

Deliverables that move price: an executive brief with three items — quantified exposure, remediation cost and timeline, and impact on key exit metrics (NRR, CAC payback, EBITDA conversion). That brief turns abstract technical risk into financial terms buyers and LPs understand.

When IP, security and market signals are triaged this way — with priced remediation, documented upside and an executable capture plan — the fund can negotiate from strength. The next step is to convert those prioritized pre‑deal commitments into a tight, owner‑ready initial value‑creation plan that delivers measurable results in the first 90 days.

90‑day value creation plan for portfolio companies

Lift retention: GenAI CX assistants, success platforms, and personalization (CSAT +20–25%, churn −30%)

“GenAI CX assistants and customer‑success platforms deliver measurable retention lifts: CSAT improvements of +20–25%, customer‑churn reductions around 30%, and upsell/cross‑sell uplifts (~15%) — driving higher LTV and more predictable recurring revenue.” Deal Preparation Technologies to Enhance Valuation of New Portfolio Companies — D-LAB research

90‑day checklist

– Week 0: Baseline. Reconcile churn cohorts, NRR, renewal timing and top churn drivers. Create a one‑page retention dashboard.

– Week 1–3: Quick wins. Deploy a GenAI call/chat assistant for highest‑volume support flows, implement automated onboarding nudges, and fix top three friction points surfaced by customer feedback.

– Week 4–7: Pilot CS platform. Integrate product usage, CRM and support data into a customer‑health engine; run targeted playbooks for at‑risk accounts and expansion candidates.

– Week 8–12: Scale and measure. Convert successful pilots into runbooks, assign owners (CS lead + PM), lock cadence (weekly health review), and publish 30/60/90 day impact on churn and expansion.

Core KPIs: reduction in monthly churn rate, change in NRR, CSAT lift, number of expansion opportunities activated, and incremental LTV. Each KPI needs a named owner, baseline, and expected delta by day 90.
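To set the baseline and expected delta, it helps to link churn to LTV explicitly. A minimal sketch follows, assuming constant monthly churn (so expected lifetime is 1 / churn) and hypothetical inputs. The 30% churn reduction is used only as a scenario taken from the headline range above, not as a forecast.

```python
def monthly_churn_rate(customers_at_start, customers_lost):
    """Share of customers active at the start of the month who left during it."""
    return customers_lost / customers_at_start


def simple_ltv(monthly_arpa, gross_margin, monthly_churn):
    """Margin-adjusted LTV under a constant-churn assumption."""
    if monthly_churn <= 0:
        raise ValueError("monthly churn must be positive")
    return monthly_arpa * gross_margin / monthly_churn


# Illustrative baseline: 2,000 customers, 40 lost in the month.
baseline_churn = monthly_churn_rate(2_000, 40)
scenario_churn = baseline_churn * (1 - 0.30)

ltv_before = simple_ltv(monthly_arpa=100, gross_margin=0.8, monthly_churn=baseline_churn)
ltv_after = simple_ltv(monthly_arpa=100, gross_margin=0.8, monthly_churn=scenario_churn)
print(round(ltv_before), round(ltv_after))
```

Because LTV scales with the inverse of churn, even modest retention gains compound into a visible change in LTV/CAC.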

Grow volume: AI sales agents and intent‑led pipeline (close rates +32%, cycles −27–40%)

90‑day checklist

– Week 0: Prioritize accounts and segments where intent signals show highest concentration. Define conversion targets and CAC constraints for experiments.

– Week 1–4: Launch an intent data feed + AI lead enrichment into CRM. Set up automated scoring and routing so high‑intent leads get personalised outreach within hours.

– Week 5–8: Run an outbound sprint using AI agents to create personalized sequences, measure MQL→SQL conversion, and shorten follow‑up latency.

– Week 9–12: Optimize and scale top performing sequences, roll winning playbooks to the field, and embed performance into sales compensation or KPIs.

Core KPIs: incremental qualified pipeline, close rate on intent cohorts, average sales cycle length, and CAC payback on the experiment cohort.
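Measuring the experiment cohort against a baseline is straightforward once closed opportunities are exported from the CRM. The sketch below assumes each closed deal is a dict with hypothetical `won` and `cycle_days` fields. It does not test for statistical significance, which small cohorts will need before any claim is made.

```python
from statistics import mean


def cohort_metrics(deals):
    """Close rate and average won-deal cycle length for closed opportunities."""
    deals = list(deals)
    if not deals:
        raise ValueError("cohort has no closed deals")
    won = [d for d in deals if d["won"]]
    return {
        "close_rate": len(won) / len(deals),
        "avg_cycle_days_won": mean(d["cycle_days"] for d in won) if won else None,
    }


def relative_change(new, old):
    """Relative change versus baseline (0.25 == +25%)."""
    return (new - old) / old


# Illustrative data only.
baseline = [{"won": True, "cycle_days": 60}, {"won": False, "cycle_days": 75},
            {"won": False, "cycle_days": 90}, {"won": False, "cycle_days": 45}]
intent = [{"won": True, "cycle_days": 40}, {"won": True, "cycle_days": 50},
          {"won": False, "cycle_days": 70}, {"won": False, "cycle_days": 30}]

b, i = cohort_metrics(baseline), cohort_metrics(intent)
print(relative_change(i["close_rate"], b["close_rate"]),
      relative_change(i["avg_cycle_days_won"], b["avg_cycle_days_won"]))
```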

Grow deal size: recommendation engines and dynamic pricing (AOV +30%, 10–15% revenue lift)

90‑day checklist

– Week 0–2: Identify high‑impact SKU/customer segments and instrument data (transactions, session behaviour, win/loss reasons).

– Week 3–6: A/B test a recommendation engine for checkout or seller prompts (upsell bundles, add‑ons) and run a parallel dynamic‑pricing pilot on a selected set of SKUs.

– Week 7–10: Evaluate AOV, attachment rates and margin impact; iterate rules or models to protect gross margin.

– Week 11–12: Convert validated models into production rules for the highest ROI categories and hand over to commercial ops with monitoring alerts.

Core KPIs: change in average order value (AOV), attach/upsell rate, margin per transaction, and incremental revenue attributable to recommendation/pricing changes.
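The week 7–10 evaluation can be sketched as a comparison of control and treatment orders, with an explicit check that margin per transaction is protected. The data shape (revenue and gross margin amount per order) and the function names are assumptions, and the sketch reports point estimates only, without a significance test.

```python
def order_metrics(orders):
    """orders: list of (revenue, gross_margin_amount) tuples, one per transaction."""
    n = len(orders)
    if n == 0:
        raise ValueError("no orders in group")
    return {
        "aov": sum(revenue for revenue, _ in orders) / n,
        "margin_per_order": sum(margin for _, margin in orders) / n,
    }


def evaluate_pilot(control, treatment):
    """Relative AOV lift and margin change of treatment versus control."""
    c, t = order_metrics(control), order_metrics(treatment)
    return {
        "aov_lift": t["aov"] / c["aov"] - 1,
        "margin_change": t["margin_per_order"] / c["margin_per_order"] - 1,
        "protects_margin": t["margin_per_order"] >= c["margin_per_order"],
    }


# Illustrative orders only.
control = [(100, 40), (120, 48)]
treatment = [(130, 50), (150, 54)]
print(evaluate_pilot(control, treatment))
```

A pilot that lifts AOV but fails the `protects_margin` check is a candidate for rule changes rather than rollout.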

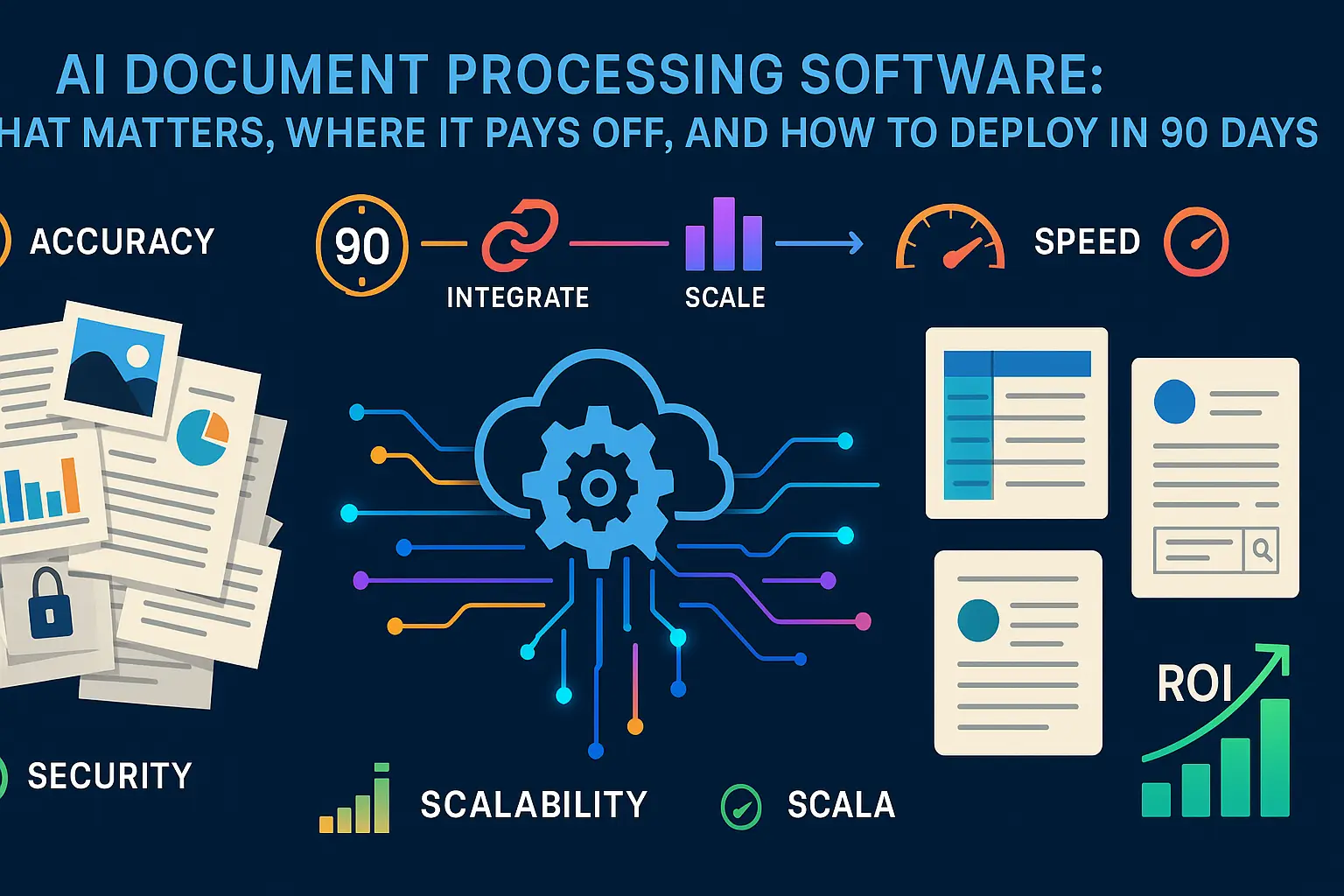

Automate SG&A: co‑pilots and agents that free capacity (40–50% task reduction, 112–457% ROI)

90‑day checklist

– Week 0: Map the top 20 repetitive SG&A tasks (finance close, procurement approvals, reporting, CRM data entry) and quantify hours and cost.

– Week 1–4: Deploy copilots/agents for the two highest‑value tasks, with a clear rollback plan (for example, automated invoice matching and CRM enrichment).

– Week 5–8: Measure time saved and error rates, then redeploy freed capacity into revenue‑generating work or customer success.

– Week 9–12: Expand automation to adjacent tasks, implement governance for model updates, and calculate ROI for the first 90 days to fund next investments.

Core KPIs: FTE hours saved, task error reduction, cost per close (finance), lead response time, and realized ROI versus baseline run‑rate.
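The 90‑day ROI calculation is simple arithmetic, but the inputs need agreeing up front. A minimal sketch, assuming savings are valued at a loaded hourly cost and that freed hours are actually redeployed or removed (otherwise the benefit is not realised). All names and figures are hypothetical.

```python
def automation_roi(hours_saved_per_month, loaded_hourly_cost, months, total_cost):
    """ROI over the period: (benefit - cost) / cost, where 0.5 == 50%."""
    if total_cost <= 0:
        raise ValueError("total cost must be positive")
    benefit = hours_saved_per_month * loaded_hourly_cost * months
    return (benefit - total_cost) / total_cost


# Illustrative: 200 hours saved per month at a loaded cost of 50 per hour,
# measured over the first 3 months against 20,000 of licence and setup cost.
print(automation_roi(hours_saved_per_month=200, loaded_hourly_cost=50,
                     months=3, total_cost=20_000))
```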

Execution rules across all plays: keep experiments small and measurable, assign a single accountable owner, require a hypothesis + success criteria before launch, and protect data quality so measurement is reliable. In parallel, tie every 90‑day initiative to one line in the exit narrative (revenue uplift, margin expansion or risk reduction) so the fund can show causal value creation.

These commercial and productivity plays set the stage for deeper operational improvements — next we look at manufacturing and supply‑chain levers that funds often undervalue when building longer‑term margin expansion plans.


Operations edge most funds underrate: manufacturing and supply chain levers

Predictive and prescriptive maintenance to unlock capacity

Maintenance is not just a cost centre — when done right it frees hidden capacity, stabilizes throughput, and protects margin. Start with an asset‑criticality map, instrument the highest‑risk equipment for condition monitoring, and move from time‑based servicing to condition‑based alerts. Prioritise fixes that remove recurring unplanned stops and put in place simple prescriptive actions operators can follow when an alert fires.

Practical first steps: baseline current reliability and failure modes, deploy sensors or connect PLC/SCADA signals where needed, run a short prescriptive pilot on one production line, then codify the failure‑to‑action flows so improvements stick.

Core metrics to track: overall equipment effectiveness (OEE), unplanned downtime, mean time between failures (MTBF), and maintenance backlog — each with a named owner and an agreed cadence for review.
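For baselining, OEE and MTBF can be computed from shift data using their standard definitions: OEE is availability × performance × quality, and MTBF is operating time divided by the number of failures. The function and parameter names below are illustrative, as are the sample shift figures.

```python
def oee(planned_minutes, run_minutes, ideal_cycle_minutes, total_units, good_units):
    """Overall equipment effectiveness as a fraction (0.7 == 70%)."""
    availability = run_minutes / planned_minutes
    performance = (ideal_cycle_minutes * total_units) / run_minutes
    quality = good_units / total_units
    return availability * performance * quality


def mtbf(operating_hours, failures):
    """Mean time between failures, in the same unit as operating_hours."""
    if failures == 0:
        raise ValueError("no failures recorded; MTBF is undefined for this window")
    return operating_hours / failures


# Illustrative shift: 480 planned minutes, 400 running, 1 minute ideal cycle,
# 360 units produced of which 342 were good.
print(round(oee(480, 400, 1.0, 360, 342), 4), mtbf(400, 4))
```

Tracking the three OEE components separately shows whether the loss comes from stops, slow running or scrap, which points to different fixes.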

Process optimization, additive manufacturing, and digital twins

Process optimisations remove waste and improve quality without heavy capex. Combine simple lean experiments (bottleneck reduction, standard work, poka‑yoke) with selective digital tools: simulation or a lightweight digital twin to test sequence changes, and additive techniques where customization or tooling cost is a blocker to faster iterations.

Run short value‑led experiments: map the value stream, identify the most impactful defect or throughput cause, pilot a focused fix, measure delta, and embed the change into SOPs. Use digital twins for scenario testing only where those scenarios meaningfully reduce risk or time to market.

Core metrics to track: defect rate, first‑pass yield, cycle time, and time‑to‑market for new SKUs.

Lights‑out cells and energy/material efficiency that lift margins

Automation investments should be judged by margin uplift and risk reduction, not novelty. Target “lights‑out” or high‑automation cells where volumes and part variability justify the investment and where automation removes labour constraints on scale. Parallel to automation, audit energy and material flows for low‑cost efficiency gains (process heating, compressed air, scrap reduction).

Implement a two‑track approach: quick operational changes (material rework rules, targeting high‑waste processes) and a staged automation roadmap with business cases, pilot cell, and rollout criteria tied to return thresholds and risk tolerance.

Core metrics to track: throughput per shift, energy per unit, yield after automation, and total cost per unit.

Resilient flows: inventory and supply‑chain planning

Supply‑chain resilience is a direct lever on working capital and service levels. Move beyond one‑off vendor fixes: build a demand‑driven planning loop that links forecasts, safety stock rules, and supplier lead‑time variability to actionable reorder policies. Where complexity is high, introduce constrained optimisation (S&OP with scenario testing) rather than ad‑hoc fixes.

Quick wins include cleaning master data, short‑listing critical suppliers for dual sourcing or inventory buffering, and instituting a rapid escalation path for supply disruptions. Longer‑term work should focus on network optimisation and contingency playbooks.

Core metrics to track: inventory days or turns, fill rate/service level, supplier on‑time in full (OTIF) and working capital tied to inventory.
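A reorder policy that reflects both demand and lead‑time variability can be sketched with the textbook safety‑stock formula. It assumes demand and lead time are independent and roughly normally distributed, which should be checked against real data. Function names and sample values are illustrative.

```python
from math import sqrt
from statistics import NormalDist


def safety_stock(service_level, avg_daily_demand, sd_daily_demand,
                 avg_lead_time_days, sd_lead_time_days):
    """Safety stock for a target cycle service level (strictly between 0 and 1)."""
    z = NormalDist().inv_cdf(service_level)
    variance = (avg_lead_time_days * sd_daily_demand ** 2
                + (avg_daily_demand * sd_lead_time_days) ** 2)
    return z * sqrt(variance)


def reorder_point(service_level, avg_daily_demand, sd_daily_demand,
                  avg_lead_time_days, sd_lead_time_days):
    """Expected demand over the lead time plus safety stock."""
    return (avg_daily_demand * avg_lead_time_days
            + safety_stock(service_level, avg_daily_demand, sd_daily_demand,
                           avg_lead_time_days, sd_lead_time_days))


def inventory_turns(annual_cogs, average_inventory):
    return annual_cogs / average_inventory


def inventory_days(turns):
    return 365 / turns


# Illustrative item: 100 units/day (sd 20), 4-day lead time (sd 1 day), 95% service.
print(round(reorder_point(0.95, 100, 20, 4, 1)),
      round(inventory_days(inventory_turns(1_200_000, 200_000)), 1))
```

Comparing the result with and without lead‑time variability shows how much inventory an unreliable supplier is costing.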

How to prioritise and scale operational levers

Operational programmes win when they are prioritized by economic impact and ease of implementation. Use a simple heat‑map: value at stake vs. ease/rapidity of delivery. For each candidate lever create a 30/60/90 plan with owner, success criteria, and an escalation route for blockers.
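The heat‑map can be kept as simple as two 1 to 5 scores per lever. The sketch below sorts levers and assigns a quadrant; the threshold, the quadrant labels and the example levers are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Lever:
    name: str
    value: int  # value at stake, scored 1 (low) to 5 (high)
    ease: int   # ease and speed of delivery, scored 1 (hard) to 5 (easy)


def quadrant(lever, threshold=3):
    """Place a lever in one of four heat-map quadrants."""
    high_value = lever.value >= threshold
    easy = lever.ease >= threshold
    if high_value and easy:
        return "do now"
    if high_value:
        return "plan and fund"
    if easy:
        return "quick fill-in"
    return "deprioritise"


def prioritise(levers):
    """Highest combined score first; value at stake breaks ties."""
    return sorted(levers, key=lambda l: (l.value * l.ease, l.value), reverse=True)


# Illustrative levers only.
levers = [
    Lever("condition monitoring pilot", value=4, ease=4),
    Lever("lights-out cell", value=5, ease=1),
    Lever("master data clean-up", value=2, ease=5),
]
for lever in prioritise(levers):
    print(lever.name, "->", quadrant(lever))
```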

Insist on measurable pilots, rapid feedback loops with ops teams, and a governance rhythm that reviews outcomes against the fund’s KPI stack. Avoid large, unfunded transformation efforts with long payback periods unless they are essential to the exit story and have committed funding.

When these manufacturing and supply‑chain initiatives show sustainable uplifts in throughput, margin and working capital, they become credible components of the fund’s operational improvement narrative. They also lay the foundation for the metrics, governance, and evidence package that buyers require at exit.

Build exit‑readiness from day one: metrics, governance, and proof

KPI stack and cadence: NRR, LTV/CAC, payback, OEE, price realization, automation ROI

Define a compact KPI stack that maps directly to valuation drivers and the eventual buyer’s checklist. Start with a single-sheet metrics framework that shows baseline, target, owner and the lead levers for each metric (for example: net revenue retention, LTV/CAC, CAC payback, OEE for production assets, price realization and automation ROI).

Operationalise the stack by agreeing a reporting cadence (weekly operational huddles, monthly board pack, quarterly review for strategic bets). Ensure each metric has: (1) a single accountable owner, (2) a documented definition and data source, and (3) a validated measurement pipeline so numbers are auditable.

Make dashboards actionable: surface variance to plan, highlight top three drivers for any movement, and link every metric to a one‑page playbook that lists experiments, expected impact and confidence. This is how small interventions become credible lines in the exit narrative.
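A single‑sheet metrics framework maps naturally onto a small record per KPI. The sketch below is one possible structure, with hypothetical field names and figures. It measures progress as the share of the baseline‑to‑target gap closed, which works for metrics where lower is better as well.

```python
from dataclasses import dataclass, field


@dataclass
class Kpi:
    name: str
    owner: str
    baseline: float
    target: float
    actual: float
    levers: list = field(default_factory=list)

    def progress_to_target(self) -> float:
        """Share of the baseline-to-target gap closed (can be negative or above 1)."""
        gap = self.target - self.baseline
        if gap == 0:
            raise ValueError(f"{self.name}: target equals baseline")
        return (self.actual - self.baseline) / gap


def off_track(kpis, expected_progress):
    """KPIs whose progress is below what the plan expects at this point."""
    return [k for k in kpis if k.progress_to_target() < expected_progress]


# Illustrative stack, reviewed a quarter of the way through the plan.
stack = [
    Kpi("NRR", "CS lead", baseline=1.00, target=1.10, actual=1.05,
        levers=["health playbooks", "expansion offers"]),
    Kpi("CAC payback (months)", "CRO", baseline=18, target=12, actual=17.5,
        levers=["intent routing", "pricing"]),
]
print([k.name for k in off_track(stack, expected_progress=0.25)])
```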

Compliance and data room: SOC 2 reports, ISO evidence, audit trails, customer references

Treat the data room and compliance artefacts as value‑creation assets, not last‑minute chores. Maintain a living evidence repository with current copies of key certificates, audit trails, change logs, third‑party security assessments and customer references that corroborate revenue and growth claims.

Practical steps: catalogue required artefacts by buyer type (strategic, financial, public), run a gap analysis, assign remediation owners and timelines, and create an “evidence index” that maps every exit claim to supporting documents. For ongoing compliance, implement simple evidence capture processes so artefacts are produced as part of business as usual rather than retrofitted under time pressure.
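An evidence index can start life as a plain mapping from each exit claim to its supporting documents, which makes gaps easy to list. The claims and file names below are invented for illustration.

```python
def evidence_gaps(evidence_index):
    """Return exit claims that have no supporting document listed."""
    return sorted(claim for claim, docs in evidence_index.items() if not docs)


# Hypothetical index: claim -> supporting artefacts in the data room.
evidence_index = {
    "NRR above 105% for four quarters": ["nrr_cohort_workbook.xlsx", "auditor_letter.pdf"],
    "SOC 2 Type II in place": ["soc2_report.pdf"],
    "Churn reduced after CS platform rollout": [],
    "No single supplier above 20% of spend": [],
}

for claim in evidence_gaps(evidence_index):
    print("missing evidence:", claim)
```

Each gap then gets a remediation owner and a date, exactly as in the gap analysis described above.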

Exit story: quantifying AI‑driven growth, margin expansion, and risk reduction

Build the exit story around causal, verifiable outcomes. For technology or AI initiatives this means moving beyond “proof of concept” to a quantified before/after: what changed (revenue, margin, churn), why it changed (which features/plays), and how the improvement will scale.

Use a three‑part template: (1) the hypothesis (what the tech will enable), (2) the experiment and evidence (pilot design, measurement approach, results), and (3) the scaling path (cost to scale, runway, forecasted incremental EBITDA). Include sensitivity analysis and clear documentation of assumptions so potential buyers can stress test the case quickly.
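Sensitivity analysis for the scaling path can be as light as a grid over the two or three assumptions that matter most. The sketch below varies revenue uplift and contribution margin against a fixed run cost. The simple EBITDA formula, the parameter names and the figures are assumptions for illustration.

```python
def incremental_ebitda(revenue_uplift, contribution_margin, annual_run_cost):
    """Incremental EBITDA from an initiative under a simple contribution model."""
    return revenue_uplift * contribution_margin - annual_run_cost


def sensitivity_grid(uplifts, margins, annual_run_cost):
    """Incremental EBITDA for every combination of uplift and margin."""
    return {
        (uplift, margin): incremental_ebitda(uplift, margin, annual_run_cost)
        for uplift in uplifts
        for margin in margins
    }


# Illustrative downside, base and upside cases.
grid = sensitivity_grid(uplifts=[500_000, 1_000_000, 1_500_000],
                        margins=[0.3, 0.4, 0.5],
                        annual_run_cost=150_000)
for (uplift, margin), value in sorted(grid.items()):
    print(f"uplift {uplift:>9,} margin {margin:.0%} -> {value:>9,.0f}")
```

Showing the cases where the initiative breaks even or turns negative tends to build more buyer confidence than a single point estimate.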

Model risk, privacy, and change management: controls that reassure buyers

Buyers expect to see controls around models, data and organisational change. For models, keep versioned notebooks or model cards that document lineage, training data, performance metrics and known limitations. For privacy, maintain DPIAs, data‑processing agreements and a clear record of consent/usage boundaries.

Change management matters as much as tech. Produce a change log and adoption metrics showing who uses new tools, how workflows changed, and concrete evidence that the organisation can operate the capability without heavy external support. Combine technical controls (access, logging, rollback plans) with human controls (training, escalation paths, governance committee minutes) so risk reductions are demonstrable.

Turning readiness into a saleable package

Package metrics, compliance artefacts and the exit story into a concise seller’s pack: a one‑page investment thesis, a five‑page operational evidence memo, and a supporting data room with audited numbers and third‑party corroboration. That trio allows bidders to move quickly and with confidence — shortening sale timelines and reducing discounting for perceived risk.

With these foundations in place, teams can more confidently prioritise the commercial and operational levers that drive topline acceleration and margin expansion, knowing that every intervention is already mapped back to the metrics and evidence buyers will demand.