Why this matters right now. Large language models like ChatGPT aren’t just a flashy demo — when wired into the right processes they can do real work: answer customers, draft and personalize outreach, summarize meetings, and free skilled people to focus on judgment and relationship-building. This guide shows how to turn those capabilities into high‑ROI workflows, the technical stack you’ll actually need, and a practical 90‑day plan to get from idea to production without wasting months on experimentation.

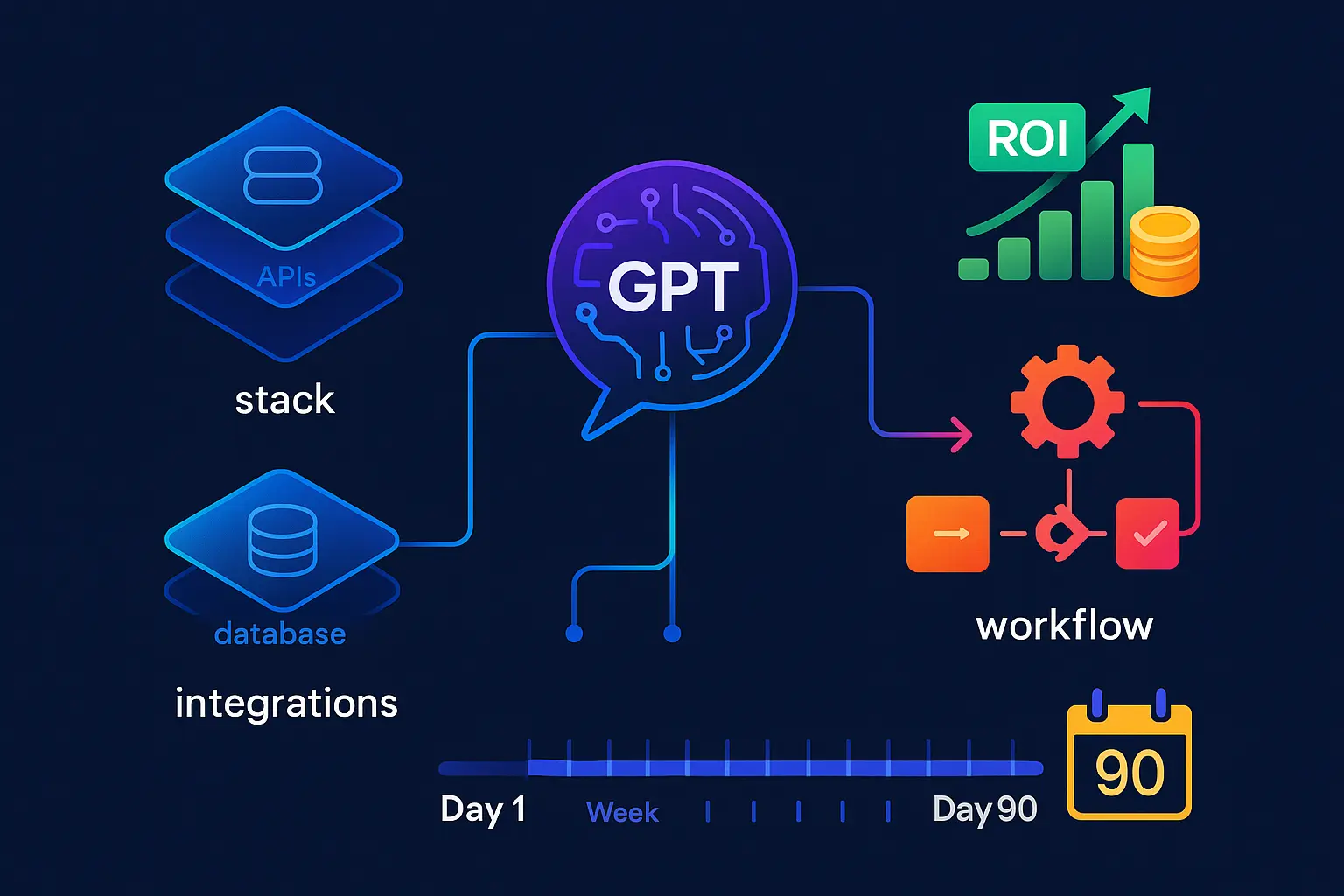

What you’ll find in this post. We start with plain-English definitions and simple examples so you and your team can decide which tasks are a fit for LLM automation and which are better left to scripts or humans. Then we walk through the minimum viable stack (model + retrieval + tools + guardrails) and real orchestration options (Zapier, Make, Power Automate, or direct API). You’ll also get three battle-tested automations that tend to pay back fastest, and a phased 90‑day rollout plan that focuses on measurable value, safety, and cost control.

No fluff, just practical tradeoffs. Automation isn’t magic. I’ll call out where LLMs shine (language-heavy, ambiguous tasks that benefit from context) and where they struggle (strict numeric crunching, unrecoverable actions without human review). You’ll learn the metrics to track from day one — CSAT, average handle time, deflection rate, conversion lift, and hours saved — so you can prove impact instead of guessing.

Who this is for. If you’re a product manager, ops lead, head of support, growth marketer, or an engineer asked to “add AI,” this guide gives you a clear path: choose one high‑value use case, build a safe MVP, and scale in 90 days. If you already have AI experiments, you’ll get concrete guardrails for hallucinations, prompt injections, PII handling, and monitoring.

Quick preview — what pays back fastest. In the sections ahead we dig into three practical automations that commonly deliver fast returns: customer-service assistants (self‑service + agent copilot), B2B sales agents with hyper‑personalized content, and education-focused tutors/assistants. For each, you’ll see the architecture, the expected benefits, and the short checklist to launch an MVP.

Ready to build something useful fast? Keep reading — the first 30 days focus on picking the right use case and establishing baseline metrics so every decision after that is measured and reversible.

What ChatGPT automation is—and when it works best

Plain-English definition and quick examples (from replies to full workflows)

ChatGPT automation means using a conversational large language model as a programmable component inside your workflows: not just a chat window, but a step that reads context, generates language, calls tools or APIs, and routes outcomes. At one end of the spectrum that looks like short, instant replies — e.g., drafting an email, rephrasing a policy, or answering a simple customer question. At the other end it becomes a multi-step automation: gather customer history, summarize the issue, propose a solution, call a knowledge-base API to fetch a link, and either resolve the case or hand it to a human with an evidence-backed summary.

Examples you might recognize include an assistant that auto-composes personalized outreach, a help-bot that triages incoming requests and creates prioritized tickets for agents, or an internal assistant that turns meeting notes into action items and calendar tasks. The common thread is language-driven decision-making combined with procedural steps (look up, synthesize, act, escalate).
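To make the look up, synthesize, act, escalate pattern concrete, here is a minimal Python sketch. The CRM lookup, knowledge-base search, and model call are hypothetical stand-ins (`fetch_history`, `search_kb`, `draft_reply`) so the control flow runs on its own; in a real deployment each would call your own systems and an LLM API.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical stand-ins for a CRM lookup, a knowledge-base API and an LLM call.
def fetch_history(customer_id: str) -> list[str]:
    crm = {"c1": ["Order #1001 delayed", "Asked about refund timing"]}
    return crm.get(customer_id, [])

def search_kb(query: str) -> str | None:
    kb = {"refund": "https://example.com/kb/refunds"}
    return next((url for key, url in kb.items() if key in query.lower()), None)

def draft_reply(issue: str, history: list[str], link: str | None) -> str:
    # Placeholder for the model step that summarizes and proposes a fix.
    summary = f"Issue: {issue} Prior contacts: {len(history)}."
    return f"{summary} See {link}" if link else summary

@dataclass
class Outcome:
    resolved: bool
    message: str
    evidence: list[str] = field(default_factory=list)

def handle_case(customer_id: str, issue: str) -> Outcome:
    history = fetch_history(customer_id)        # look up
    link = search_kb(issue)                     # retrieve a supporting document
    reply = draft_reply(issue, history, link)   # synthesize
    if link is not None:                        # act: a grounded answer exists
        return Outcome(resolved=True, message=reply, evidence=[link])
    # escalate: give the human agent the summary and the context it was built from
    return Outcome(resolved=False, message=reply, evidence=history)
```

The escalation branch returns the same summary the bot would have sent, so the agent starts from an evidence-backed draft rather than a blank ticket.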

Fit test: tasks for LLMs vs. scripts (ambiguity, context, language-heavy work)

Deciding whether to use ChatGPT automation or a classic script comes down to the nature of the task.

Choose an LLM when the work requires understanding ambiguous input, maintaining or referencing conversational context, producing or interpreting natural language, summarizing varied sources, or making judgement calls where exact rules are impractical. LLMs excel at personalization, intent detection, drafting and rewriting, and bridging gaps where data is partial or noisy.

Choose deterministic scripts or rule-based automations when outcomes must be exact, auditable, and repeatable — e.g., calculations, fixed-format data transforms, binary routing based on precise thresholds, or actions with hard regulatory constraints. Scripts are usually cheaper, faster, and easier to validate for purely transactional logic.

In practice the best systems combine both: use scripts for gating, validation, and connector work (authentication, database writes, rate-limited API calls) and let the LLM handle interpretation, language generation, and context-aware decisioning. A short checklist to decide: is the input ambiguous? Is context critical? Is language central to value? If yes, the LLM is a good fit; if no, prefer scripts or hybrid flows.
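The checklist can be written down as a small decision helper so teams apply it consistently. This is one possible encoding, not a standard: it treats any "yes" as an LLM signal and recommends a hybrid flow when the output must also be exact. The field names are illustrative.

```python
from dataclasses import dataclass

@dataclass
class TaskProfile:
    ambiguous_input: bool     # Is the input ambiguous or free-form?
    context_critical: bool    # Does the task depend on conversation or customer context?
    language_central: bool    # Is producing or interpreting language the main value?
    needs_exact_output: bool  # Must the result be exact, auditable and repeatable?

def recommend(task: TaskProfile) -> str:
    llm_signals = sum([task.ambiguous_input, task.context_critical, task.language_central])
    if llm_signals == 0:
        return "script"
    if task.needs_exact_output:
        # The LLM interprets; deterministic code validates and performs the write.
        return "hybrid"
    return "llm"
```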

Expected gains: faster responses, higher CSAT, more revenue (benchmarks inside)

When you apply ChatGPT automation to the right use cases, the typical benefits are faster response cycles, improved customer and user experience, and efficiency gains that free employees for higher-value work. Those improvements translate into operational metrics you can measure: reduced time-to-first-reply, increased self-service resolution, shorter agent handling time, and clearer, more consistent communication that raises satisfaction scores and conversion rates.

To capture value predictably, define a baseline for each metric before you launch a pilot, then track the same metrics during and after rollout. Focus on a small set of KPIs that map directly to business outcomes (for example: response latency, resolution rate, customer satisfaction, agent time saved, and pipeline influenced). Use A/B pilots or controlled rollouts so you can attribute improvements to the automation rather than other changes.
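For a controlled rollout, the comparison against baseline can be as simple as a relative lift plus a significance check. The sketch below uses a standard two-sided two-proportion z-test for a rate metric such as resolution rate; the counts in the usage note are made-up illustration, not benchmarks.

```python
import math

def relative_lift(baseline: float, treatment: float) -> float:
    """Relative change of a metric versus its pre-launch baseline."""
    return (treatment - baseline) / baseline

def two_proportion_z_test(success_a: int, n_a: int, success_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference in rates between a control arm (a)
    and an automated arm (b). Returns (z statistic, p-value)."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

For example, `two_proportion_z_test(400, 1000, 460, 1000)` compares a 40% control resolution rate with 46% in the automated arm. Continuous metrics such as handle time need a different test (for instance a t-test), and small samples call for more care than this sketch takes.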

Remember that gains compound: faster, clearer responses drive better user sentiment, which can lower repeat contacts and boost lifetime value; automations that remove busywork also increase employee capacity for outreach, conversion, and retention. The practical next step is choosing the right building blocks and orchestration approach so these benefits scale safely and measurably.

With these definitions and a clear fit test in hand, you’re ready to map technical components to the use case you picked and design a stack that balances speed, safety, and observability — which is what we’ll cover next.

Your ChatGPT automation stack

Core building blocks: model + retrieval + tools + guardrails

Think of the stack as four layers that work together. The model layer is the LLM you call for reasoning and language generation; choose a model that balances capability, latency, and cost for your use case. The retrieval layer (a vector store or search index) feeds the model focused context from manuals, CRM records, or knowledge bases so responses are grounded in your data. The tools layer provides external actions: API calls, database writes, ticket creation, calendar updates, or third‑party integrations that let the model move from advice to action. The guardrails layer wraps the whole system with safety and correctness checks — input sanitization, content filters, verification prompts, and deterministic validators that catch hallucinations and stop unsafe actions.
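To show what the retrieval layer does, here is a toy index that ranks documents by cosine similarity over word counts. It is a stand-in assumption for a real vector store, which would use learned embeddings and an approximate-nearest-neighbor index; the `min_score` cut-off illustrates how weak matches can be kept out of the prompt.

```python
import math
import re
from collections import Counter

def bag_of_words(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)  # Counter returns 0 for missing terms
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class ToyIndex:
    """Bag-of-words stand-in for a vector store (illustration only)."""

    def __init__(self, docs: dict[str, str]):
        self.docs = docs
        self.vectors = {doc_id: bag_of_words(text) for doc_id, text in docs.items()}

    def search(self, query: str, k: int = 2, min_score: float = 0.1) -> list[tuple[str, float]]:
        q = bag_of_words(query)
        scored = [(doc_id, cosine(q, vec)) for doc_id, vec in self.vectors.items()]
        hits = [hit for hit in scored if hit[1] >= min_score]  # drop weak matches
        return sorted(hits, key=lambda hit: hit[1], reverse=True)[:k]
```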

Design patterns that make these layers reliable include separation of concerns (keep retrieval logic and tool execution in application code rather than in the model prompt, passing the model only their results), short-term context windows for conversational state, and an evidence-first response style where the model cites retrieved passages or logs the data used to make a decision.
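An evidence-first style is easier to enforce when citations are machine-checkable. This sketch assumes the prompt instructs the model to cite sources as `[doc:ID]` (a hypothetical convention) and rejects answers that cite nothing or cite documents the retrieval layer never returned.

```python
import re

def cited_ids(answer: str) -> set[str]:
    # Assumes the model was told to cite sources in the form [doc:ID].
    return set(re.findall(r"\[doc:([\w-]+)\]", answer))

def check_evidence(answer: str, retrieved_ids: set[str]) -> tuple[bool, str]:
    cites = cited_ids(answer)
    if not cites:
        return False, "no citation"
    unknown = cites - retrieved_ids
    if unknown:
        return False, f"cites documents that were not retrieved: {sorted(unknown)}"
    return True, "ok"
```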

Orchestration options: Power Automate plugin, Zapier, Make, and direct API

There are three pragmatic orchestration approaches to choose from depending on speed-to-value and engineering capacity. Low-code platforms let product teams assemble flows and integrations quickly using prebuilt connectors; they’re ideal for rapid pilots and non‑engineering owners. Integration platforms (iPaaS) provide richer routing, error handling, and enterprise connectors for more complex multi-step automations. Direct API orchestration gives engineers the finest control — lower latency, better cost tuning, and custom observability — and is the route to production at scale.

Mix-and-match: start with low-code to validate the business case, then port high-volume or security-sensitive flows to direct API implementations once requirements and metrics are stable.
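One thing direct API orchestration has to handle explicitly is transient failure. A common pattern is retry with exponential backoff and jitter; in this sketch `TransientError` is a hypothetical exception you would raise for timeouts or rate-limit responses, and `fn` is whatever zero-argument callable wraps your model request.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

class TransientError(Exception):
    """Hypothetical marker for retryable failures such as timeouts or HTTP 429/5xx."""

def call_with_retries(
    fn: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error to the caller
            # Exponential backoff with full jitter: wait 0..base_delay * 2**(attempt-1) s.
            sleep(random.uniform(0, base_delay * 2 ** (attempt - 1)))
    raise AssertionError("unreachable")
```

Injecting `sleep` keeps the helper testable; in production you would also cap total wait time and log each retry.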

Data and security: PII redaction, RBAC, audit logs, least‑privilege connectors

Security design must be baked into every layer. Redact or tokenize PII before it reaches the model, apply role-based access controls so only authorized services and users can invoke sensitive automations, and enforce least privilege for connectors that read or write production systems. Maintain immutable audit logs of model inputs, retrieved context, and outbound actions so you can investigate errors and support traceability.
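A minimal sketch of tokenizing PII before a model call and restoring it afterwards. The two regular expressions are illustrative assumptions that only cover simple email and phone formats; production redaction usually needs broader detection (names, addresses, account numbers), and the mapping (`vault`) must itself be stored securely.

```python
import re

# Illustrative patterns only; real redaction needs much broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}

def tokenize_pii(text: str) -> tuple[str, dict[str, str]]:
    """Replace PII with placeholders like <EMAIL_1> and return the mapping
    so original values can be restored after the model call."""
    vault: dict[str, str] = {}
    for label, pattern in PII_PATTERNS.items():
        def swap(match: re.Match) -> str:
            token = f"<{label}_{len(vault) + 1}>"
            vault[token] = match.group(0)
            return token
        text = pattern.sub(swap, text)
    return text, vault

def restore_pii(text: str, vault: dict[str, str]) -> str:
    for token, value in vault.items():
        text = text.replace(token, value)
    return text
```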

Operationally, add rate limits and cost controls to prevent runaway usage, and isolate environments (dev/test/prod) with separate keys and datasets. Where legal or compliance risk exists, route outputs through a human‑in‑the‑loop approval step or block certain actions entirely.
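Rate limits are often implemented as a token bucket. The sketch below is a single-process version with an injectable clock for testing; a multi-instance deployment would keep the bucket state in shared storage, which is not shown.

```python
import time
from typing import Callable

class TokenBucket:
    """Allows bursts of up to `capacity` calls, refilling at `rate` calls per second."""

    def __init__(self, rate: float, capacity: int, clock: Callable[[], float] = time.monotonic):
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # caller should queue, throttle, or reject the request
```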

Instrument from day one: CSAT, AHT, deflection, conversion, time saved

Instrumenting outcomes from the start turns a shiny prototype into measurable ROI. Pick a small set of primary KPIs that tie to business goals — for support this might be first-response time, average handle time (AHT), self-service resolution rate (deflection), and CSAT; for sales it will include conversion, pipeline influenced, and time saved per rep. Capture baseline values, then track changes during A/B tests or staged rollouts.
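Once each ticket is logged with a few fields, the support KPIs reduce to simple aggregates. The `Ticket` schema here is hypothetical, and CSAT is computed with one common convention (share of 4 and 5 ratings on a 1 to 5 scale); use whatever definition your baseline used so before and after numbers are comparable.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean

@dataclass
class Ticket:
    resolved_by_bot: bool         # closed without a human agent (deflected)
    handle_minutes: float | None  # agent handling time; None if no agent touched it
    csat: int | None              # 1-5 survey score; None if not answered

def support_kpis(tickets: list[Ticket]) -> dict[str, float]:
    agent_times = [t.handle_minutes for t in tickets if t.handle_minutes is not None]
    scores = [t.csat for t in tickets if t.csat is not None]
    return {
        "deflection_rate": sum(t.resolved_by_bot for t in tickets) / len(tickets),
        "aht_minutes": mean(agent_times) if agent_times else 0.0,
        "csat_pct": 100 * sum(s >= 4 for s in scores) / len(scores) if scores else 0.0,
    }
```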

Log telemetry at the workflow level (latency, error rate, tool-call success), at the content level (which retrieval hits were used, whether the model cited sources), and at the outcome level (customer rating, ticket reopen rate, revenue impact). Use that data to close the feedback loop: refine prompts, adjust retrieval, tighten guardrails, and migrate winning automations from pilot to production.

With a clear, secure stack and the right metrics in place, you can move quickly from an idea to repeatable automations and then optimize for scale — next we’ll apply this foundation to concrete, high‑ROI automations you can pilot first.

3 battle-tested ChatGPT automations that pay back fast

Customer service: GenAI self‑service agent + call‑center copilot

What it does: a retrieval-augmented chat agent handles routine inquiries, surfaces policy or order data, and either resolves the case or creates a concise, evidence-backed summary for an agent. A companion call‑center copilot listens to live calls (or post-call transcripts), pulls context, suggests responses and next steps, and generates clean post-call wrap-ups so agents spend less time searching for information.

Why it pays back: automating first-line resolution and speeding up responses reduces operational load and boosts customer sentiment. For example, D-Lab research reports “80% of customer issues resolved by AI (Ema)” (D-LAB research, KEY CHALLENGES FOR CUSTOMER SERVICE, 2025).

The same research also reports a marked gain in responsiveness: “70% reduction in response time when compared to human agents (Sarah Fox)” (D-LAB research, KEY CHALLENGES FOR CUSTOMER SERVICE, 2025).

Operational tips: start with a narrow taxonomy (billing, order status, returns), connect a vetted knowledge base, and keep a human‑in‑the‑loop fallback for ambiguous or regulatory cases. Instrument ticket deflection, escalation rate, and reopen rate so you can optimize prompts and retrieval quality.
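Starting with a narrow taxonomy can be expressed as an allowlist of intents the bot may handle, with everything else going to a person. This sketch uses keyword rules as a stand-in for an LLM or classifier intent step; the intents, keywords, and "always human" terms are hypothetical examples.

```python
# Hypothetical keyword rules standing in for a model-based intent step.
TAXONOMY = {
    "billing": ("invoice", "charge", "payment"),
    "order_status": ("where is", "tracking", "shipped"),
    "returns": ("return", "refund", "exchange"),
}
ALWAYS_HUMAN = ("chargeback", "lawyer", "gdpr")  # regulatory or legal signals

def route(message: str) -> str:
    text = message.lower()
    if any(term in text for term in ALWAYS_HUMAN):
        return "human"
    matches = [intent for intent, keywords in TAXONOMY.items()
               if any(k in text for k in keywords)]
    # Exactly one in-scope intent goes to the bot; none or several (ambiguous) go to a human.
    return matches[0] if len(matches) == 1 else "human"
```

Escalation rate is then simply the share of messages that return `"human"`, which is one of the metrics worth instrumenting from the start.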

B2B sales & marketing: AI sales agent + hyper‑personalized content

What it does: AI sales agents qualify leads, enrich CRM records, draft personalized outreach, and sequence follow-ups. Paired with a content engine that generates landing pages, emails, and proposals tailored to account signals, the automation reduces manual busywork and increases touch relevance across accounts.

Benchmarks from D-Lab show concrete efficiency lifts: “40-50% reduction in manual sales tasks. 30% time savings by automating CRM interaction (IJRPR)” (D-LAB research, B2B Sales & Marketing Challenges & AI-Powered Solutions).

Revenue and cycle improvements are reported as well: “50% increase in revenue, 40% reduction in sales cycle time (Letticia Adimoha)” (D-LAB research, B2B Sales & Marketing Challenges & AI-Powered Solutions).

Operational tips: integrate the agent with your CRM using least‑privilege connectors, validate suggested updates with reps before committing, and A/B test personalized creative vs. standard content to prove lift. Track pipeline influenced, conversion per touch, and time saved per rep to quantify ROI.
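Validating suggested CRM updates before committing them can be modeled as a review queue with a field allowlist. In this sketch the allowlist enforces least privilege at the proposal step, and nothing reaches the (here, in-memory) CRM until a rep approves; the field names and the dict-based CRM are hypothetical.

```python
from dataclasses import dataclass, field

# Least privilege: the agent may only propose changes to these (hypothetical) fields.
WRITABLE_FIELDS = {"industry", "employee_count", "next_step"}

@dataclass
class ProposedUpdate:
    record_id: str
    changes: dict[str, object]
    approved: bool = False

@dataclass
class ReviewQueue:
    pending: list[ProposedUpdate] = field(default_factory=list)

    def propose(self, record_id: str, changes: dict[str, object]) -> ProposedUpdate:
        blocked = set(changes) - WRITABLE_FIELDS
        if blocked:
            raise PermissionError(f"agent may not write fields: {sorted(blocked)}")
        update = ProposedUpdate(record_id, changes)
        self.pending.append(update)
        return update

    def approve_and_commit(self, update: ProposedUpdate, crm: dict[str, dict]) -> None:
        # Called only after a rep has reviewed the proposed change.
        update.approved = True
        crm.setdefault(update.record_id, {}).update(update.changes)
        self.pending.remove(update)
```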

Education: virtual teacher and student assistants

What it does: in education, ChatGPT automations can draft lesson plans, generate formative assessments, summarize student work, and provide on-demand tutoring or study guides. For administrative staff, bots can automate routine inquiries, enrollment checks, and scheduling.

Why it pays back: these automations free up instructor time for high‑value activities (mentoring, live instruction) and keep students engaged with tailored explanations and practice. Early pilots often report improved turnaround on grading and faster response to student questions, which increases engagement without a large staffing increase.

Operational tips: preserve academic integrity by integrating plagiarism checks and prompt‑level constraints, keep sensitive student data isolated and redacted before any model calls, and offer teacher approval gates for assessment content. Begin with a single course or department to measure educator time saved and student satisfaction before scaling.

These three patterns—self‑service + copilot for support, AI sales agents + personalized content for B2B, and virtual assistants for education—share a common rollout recipe: start small, measure tightly, and move high‑confidence flows into production. Next, we’ll lay out a stepwise rollout plan with milestones, roles, and the guardrails you need to scale safely and measure impact.

Thank you for reading Diligize’s blog!

90‑day implementation blueprint + guardrails

Days 0–30: pick one use case, baseline metrics, define success

Choose one narrow, high-impact use case you can measure (e.g., ticket triage, lead enrichment, lesson-plan drafts). Run a quick feasibility check with stakeholders to confirm data availability and minimal compliance hurdles. Define success with 3–4 clear KPIs (baseline + target) such as time‑to‑first‑reply, deflection rate, conversion uplift, or hours saved per employee.

Assemble a small cross‑functional team: a product owner, an engineer or integration lead, a subject‑matter expert, and an agent or teacher representative depending on the domain. Create a lightweight delivery plan with weekly checkpoints, a decision rule for “go/no‑go” after day 30, and a risk register for data access, PII, and escalation requirements.
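Writing the go/no-go rule down as code (or at least as an unambiguous formula) before the pilot starts keeps the decision from drifting. The rule in this sketch, "enough KPIs hit target and none regressed past baseline", is an assumption; your team may weigh KPIs differently.

```python
from dataclasses import dataclass

@dataclass
class Kpi:
    name: str
    baseline: float
    target: float
    observed: float
    higher_is_better: bool = True

    def met(self) -> bool:
        if self.higher_is_better:
            return self.observed >= self.target
        return self.observed <= self.target

def go_no_go(kpis: list[Kpi], required: int) -> str:
    """'go' if at least `required` KPIs hit target and none regressed past baseline."""
    regressed = [k.name for k in kpis
                 if (k.observed < k.baseline if k.higher_is_better else k.observed > k.baseline)]
    if regressed:
        return "no-go: regressed " + ", ".join(regressed)
    hits = sum(k.met() for k in kpis)
    return "go" if hits >= required else f"no-go: {hits}/{required} targets met"
```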

Days 31–60: build MVP, retrieval setup, human‑in‑the‑loop, red‑team prompts

Build a minimally viable automation that proves the flow end‑to‑end. Implement retrieval augmentation against a constrained, vetted knowledge source and wire the model to the smallest set of tool actions required (e.g., read KB, draft reply, create ticket). Keep the deployment scope limited to a small user cohort or a subset of traffic.

Operationalize human‑in‑the‑loop workflows: explicit confidence thresholds that route uncertain outputs to reviewers, templates for quick agent review, and clear SLAs for escalation. Run a prompt red‑team exercise where engineers and SMEs intentionally probe prompts and retrieved context to uncover hallucinations, privacy leaks, and edge cases. Capture failures and iterate rapidly on prompt engineering and retrieval filters.
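Confidence-threshold routing can be expressed as a small triage function. The thresholds and the `confidence` score are assumptions: the score might come from a separate classifier or a model self-assessment, and the cut-offs should be tuned against reviewer decisions collected during the pilot.

```python
from dataclasses import dataclass

@dataclass
class Draft:
    text: str
    confidence: float   # 0..1; source of this score is an assumption (classifier, self-rating)
    retrieval_hits: int # number of vetted passages that supported the draft

AUTO_SEND = 0.85  # hypothetical thresholds; tune on reviewed pilot data
REVIEW = 0.50

def triage(draft: Draft) -> str:
    if draft.retrieval_hits == 0:
        return "escalate"      # nothing grounded the answer
    if draft.confidence >= AUTO_SEND:
        return "auto_send"
    if draft.confidence >= REVIEW:
        return "human_review"  # reviewer approves or edits from a template
    return "escalate"
```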

Days 61–90: productionize, routing and escalation, SLAs, cost controls

Migrate the highest‑confidence flows to a production environment with proper keys, environment separation, and monitoring dashboards. Define routing and escalation rules for every failure mode: model uncertainty, retrieval miss, API failure, or compliance block. Set operational SLAs for human review windows, response times, and incident handling.

Put cost controls in place: per‑flow budgets, rate limits, and alerts for unusual usage. Automate basic rollback or throttling mechanisms and ensure observability for latency, error rates, and tool‑call success. Train end users and support teams on how the automation behaves, how to interpret model outputs, and how to trigger fallbacks.
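A per-flow budget with an alert threshold might look like the sketch below. Token prices are passed in as parameters rather than hard-coded, because they differ by provider and model and change over time; the 80% alert level is an arbitrary default.

```python
from dataclasses import dataclass, field

@dataclass
class FlowBudget:
    monthly_limit_usd: float
    alert_fraction: float = 0.8  # warn at 80% of budget (arbitrary default)
    spent_usd: float = 0.0
    alerts: list[str] = field(default_factory=list)

    def charge(self, input_tokens: int, output_tokens: int,
               usd_per_1k_in: float, usd_per_1k_out: float) -> bool:
        """Record a call's cost; return False (throttle) if it would exceed the limit."""
        cost = input_tokens / 1000 * usd_per_1k_in + output_tokens / 1000 * usd_per_1k_out
        if self.spent_usd + cost > self.monthly_limit_usd:
            self.alerts.append("budget exhausted: call blocked")
            return False
        before = self.spent_usd
        self.spent_usd += cost
        threshold = self.alert_fraction * self.monthly_limit_usd
        if before < threshold <= self.spent_usd:  # alert once, when the threshold is crossed
            self.alerts.append(f"{self.alert_fraction:.0%} of budget used")
        return True
```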

Quality guardrails: hallucination traps, prompt‑injection defenses, compliance and change management

Apply layered guardrails rather than relying on a single defense. Pre‑call sanitization and PII redaction prevent sensitive data from reaching the model. Retrieval validation ensures that only vetted documents can be surfaced; prefer document-level provenance and short evidence snippets. Post‑response validators check the facts that matter (e.g., pricing, SLA commitments) and block or flag outputs that fail deterministic checks.
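A post-response validator for one fact that matters, quoted prices, could look like this. The plan names, prices, and regular expression are hypothetical; note that it only checks prices mentioned alongside a plan name, so it complements rather than replaces review for high-stakes content.

```python
import re

# Source of truth for facts that matter (hypothetical plans and prices).
PRICE_LIST = {"starter": 29.00, "pro": 99.00}

# A plan name, then any text without "$" or ".", then a dollar amount.
PRICE_CLAIM = re.compile(r"\b(starter|pro)\b[^$.]*\$(\d+(?:\.\d{2})?)", re.IGNORECASE)

def validate_prices(answer: str) -> list[str]:
    """Return a list of problems; an empty list means every quoted price matches."""
    problems = []
    for plan, amount in PRICE_CLAIM.findall(answer):
        expected = PRICE_LIST[plan.lower()]
        if float(amount) != expected:
            problems.append(f"{plan}: quoted ${amount}, price list says ${expected:.2f}")
    return problems
```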

Defend against prompt injection by canonicalizing user inputs, stripping control tokens, and running safety filters before concatenation into prompts. Maintain a signed template repository for prompts and limit dynamic prompt assembly to preapproved building blocks. Require a human approval step for any automation that can commit money, change contracts, or perform irreversible actions.
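The sketch below combines three of these defenses: NFKC normalization plus removal of invisible control and format characters, delimiting user text so it is presented as data, and refusing any prompt template whose HMAC signature does not verify. The key handling and delimiter convention are assumptions, and none of these steps fully prevents prompt injection on its own, which is why the human approval step for irreversible actions still matters.

```python
import hashlib
import hmac
import unicodedata

# Hypothetical key; load it from a secret manager, never hard-code it in production.
SIGNING_KEY = b"replace-with-a-secret-from-your-vault"

def sign(template: str) -> str:
    return hmac.new(SIGNING_KEY, template.encode(), hashlib.sha256).hexdigest()

def canonicalize(user_text: str, max_len: int = 2000) -> str:
    # NFKC first, so look-alike characters (e.g. fullwidth "<") fold to their plain forms.
    text = unicodedata.normalize("NFKC", user_text)
    # Drop control/format characters (e.g. zero-width spaces) but keep newlines and tabs.
    text = "".join(ch for ch in text if ch in "\n\t" or unicodedata.category(ch)[0] != "C")
    # Neutralize our own delimiters so user text cannot close the data block early.
    text = text.replace("<<<", "« ").replace(">>>", " »")
    return text[:max_len]

def build_prompt(template: str, signature: str, user_text: str) -> str:
    if not hmac.compare_digest(sign(template), signature):
        raise ValueError("template is not in the approved, signed repository")
    # Assumes templates contain a single {user_input} placeholder and no other braces.
    return template.format(user_input="<<<" + canonicalize(user_text) + ">>>")
```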

For compliance and change management, document versions of prompts, retrieval sources, and model settings; store audit logs of inputs, retrieval hits, and outbound actions; and run periodic reviews with legal and security teams. Use an incremental rollout policy with clearly defined acceptance criteria for each stage and a rollback plan for regressions.
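Audit logs are more trustworthy when tampering is detectable. One common technique is a hash chain, where each entry stores the hash of the previous one; this sketch records hypothetical fields such as the prompt version, retrieval hits, and outbound action. A hash chain only detects edits, so in practice it should sit on write-once storage.

```python
import hashlib
import json
from datetime import datetime, timezone

def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

class AuditLog:
    """Append-only log: each entry includes the previous entry's hash,
    so editing or deleting a past entry breaks verification."""

    def __init__(self):
        self.entries: list[dict] = []

    def append(self, event: dict) -> dict:
        prev = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "event": json.loads(json.dumps(event)),  # snapshot; also enforces JSON-serializable
            "prev": prev,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        prev = "0" * 64
        for entry in self.entries:
            body = {k: entry[k] for k in ("ts", "event", "prev")}
            if entry["prev"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True
```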

By following this 90‑day cadence—focused pilots, rigorous testing, controlled production, and layered guardrails—you create repeatable, observable automations that deliver value quickly while keeping risk manageable. The next step is to map those outcomes back to your stack and instrumentation so you can scale the winning flows across teams and systems.